A few weeks back, I did a new Battle of the Boards for 2022, and one of the “interesting” results I came across was that the eMMC on the ODroid N2+ I use as a light office desktop was really quite slow. It didn’t surprise me at the time, because my N2+ was prone to some random I/O-based hangs (iowait goes high and the system glitches), so I just wrote it off as a weird glitch of the ODroid eMMC and went on my way.

But it bugged me. It shouldn’t be that slow. It’s a modern eMMC interface, and I know they’re capable of respectable performance. I’ve got some other eMMC modules lying around (a few more ODroid ones, plus a spare Pine64 module or two), and they’re supposedly compatible with each other. Well, nothing like a bit more testing to answer questions!

So test I did, and I’m afraid I missed something very important with my previous tests.

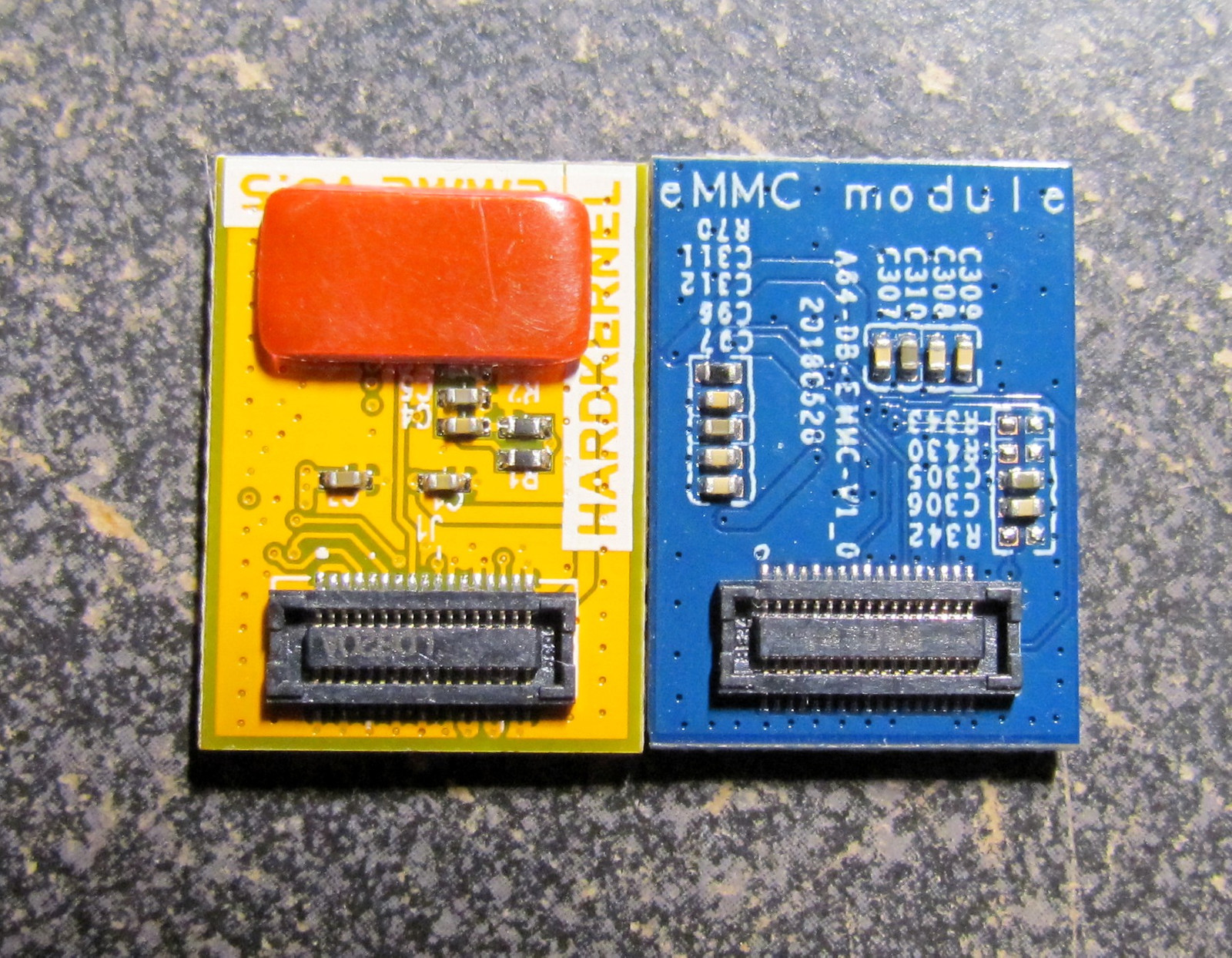

The ODroid vs Pine64 eMMC Modules

From what I’d read, the ODroid and Pine64 eMMC modules are entirely compatible with each other. They’re not really a standard, but they’ve chosen the same way to do things, and you can swap the modules back and forth. This seems entirely true, from my testing - they work just fine. But they’re not identical. They have a different layout on the back.

With the way supply chains are going, being able to go with “What’s in stock” has some value, especially if you’re trying to find one of the large, somewhat rare 128GB modules. Both companies have them, at least on occasion, but they’re not particularly easy to find at the moment. But… other than being larger, are they faster? Is there any real difference between a 64GB and 128GB module, or are they fully choked on the interface? Fear not, I’ll answer these questions and more!

Initial Benchmarking: “Huh, that’s weird…”

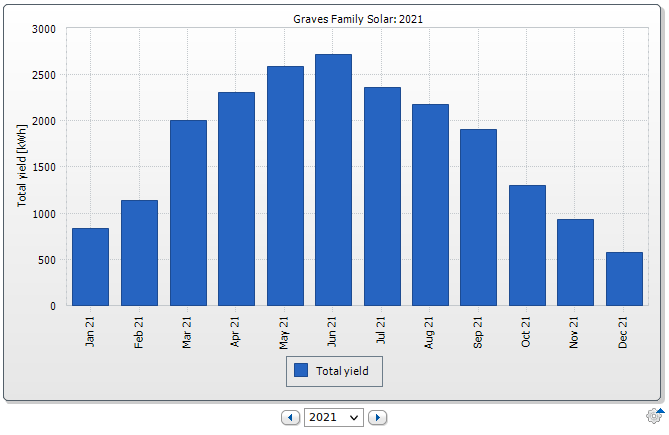

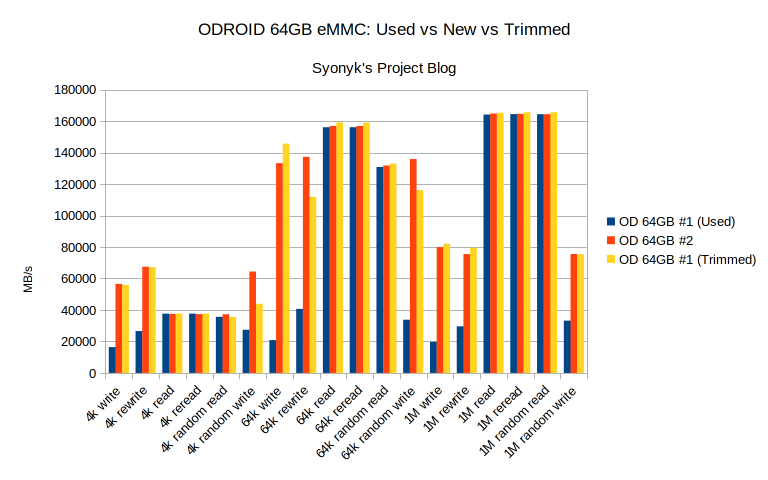

I tossed a quick Ubuntu install on a spare microSD card for the N2+, and started benchmarking the chips. I first ran the blank 64GB to compare it with the results from the other (heavily used) one, and… was immediately baffled. The blank 64GB was about what I would expect. The heavily used 64GB matched the read performance, but the write performance was just awful.

The good news here is that it’s not that the ODroid 64GB modules are glacial. It’s just that one heavily used 64GB module is glacial… which immediately made me check my mount options. Lo and behold, discard wasn’t being set on the root filesystem mount - and I’ve beaten the snot out of that card with an awful lot of ARM builds, swapping, blog renders, and all sorts of other heavy filesystem use.
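If you want to check the same thing on your own system, here is a minimal Python sketch. It assumes the usual `/proc/mounts` layout (device, mount point, filesystem type, comma-separated options), and the helper names `mount_options` and `has_discard` are made up for illustration.

```python
def mount_options(mounts_text, mount_point):
    """Return the option list for mount_point, or None if it is not listed.

    mounts_text is the content of /proc/mounts (or anything in that format).
    """
    found = None
    for line in mounts_text.splitlines():
        fields = line.split()
        # Fields: device, mount point, fs type, options, dump, pass
        if len(fields) >= 4 and fields[1] == mount_point:
            found = fields[3].split(",")  # a later entry overrides an earlier one
    return found


def has_discard(mounts_text, mount_point="/"):
    """True if the mount carries 'discard' (or a 'discard=...' variant)."""
    opts = mount_options(mounts_text, mount_point)
    if opts is None:
        return False
    return any(o == "discard" or o.startswith("discard=") for o in opts)
```

On a live system you would pass in the text of `/proc/mounts`, for example `has_discard(open("/proc/mounts").read(), "/")`. A `False` here only says online discard is off; it says nothing about whether something else runs fstrim periodically.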

One quick fstrim later (there’s really not much free space on that filesystem, and I wasn’t about to wipe my device entirely), and it was confirmed: The flash controller was mostly out of spare area to work with, and the device had just been thrashed without any trimming/discarding going on! After trimming, the performance was right back up with the other one!

Trim/Discard/fstrim/blkdiscard/???

If you’ve lived through the SSD transition and are familiar with TRIM/discard and the various tools used to cause it to happen on Linux, you can skip this section. If not, just remember that SSDs are weird. Fast, yes. Low latency, yes. But also weird, with a couple odd corner cases - one of which I was squarely in the center of.

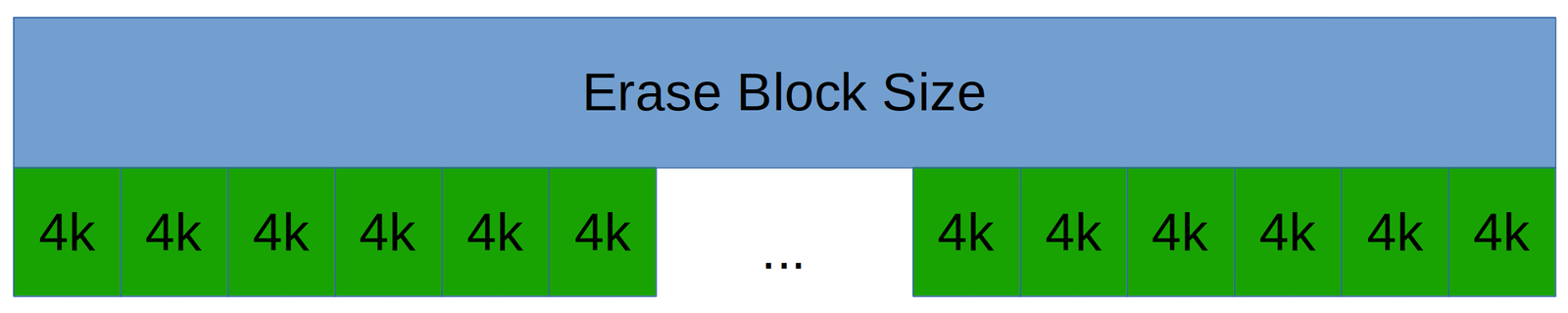

Flash based storage technologies are all similar enough, and one of their quirks is that they can only toggle a bit in one direction for a normal, non-destructive write. You can toggle the bit from 1 to 0 (or 0 to 1, depending on how you care to represent it physically) easily, but you can’t go the other way easily. To go the other way requires an awful lot of voltage, and tends to disrupt other cells around the one being written, so flash devices don’t do this. Instead, they will wipe a whole block at once, preparing it for writing, and then when data needs to be written they flip the correct bits for the write.

This would be fine - traditional magnetic hard drives can only write a block at a time as well - except, for a variety of reasons, the accessible block size and the “erase block size” are radically different. You may be able to access 512 byte or 4k blocks - but the storage medium may only be able to erase a megabyte at a time! Unless you want a bunch of other data to be wiped, this is no good.

Most flash storage works around this by just keeping a set of pointers from “logical block ID” to “physical block,” and if you write new data to a block, the controller just writes to a blank space and updates the pointer. If you write block 0 three times, it’s likely to be physically stored three places, one for each version. Eventually, the drive controller gets around to consolidating this and garbage collecting, but if the drive runs out of blank space to take new writes, it tends to get really slow - exactly as I was seeing.
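The pointer trick is easier to see in code. This is a toy model, not how any real eMMC controller is implemented: the `ToyFTL` class and its page-per-write layout are assumptions chosen to show the "write block 0 three times, store it three places" behavior.

```python
class ToyFTL:
    """Toy flash translation layer: logical block ID -> physical page."""

    def __init__(self, physical_pages):
        self.mapping = {}                       # logical block -> physical page index
        self.data = [None] * physical_pages     # what each physical page holds
        self.valid = [False] * physical_pages   # is the page the current copy?
        self.next_free = 0                      # next erased page to write into

    def write(self, lba, payload):
        if self.next_free >= len(self.data):
            # A real controller would have to garbage collect here, which is slow.
            raise RuntimeError("no erased pages left; garbage collection needed")
        old = self.mapping.get(lba)
        if old is not None:
            self.valid[old] = False             # old copy becomes stale, not erased
        self.data[self.next_free] = payload
        self.valid[self.next_free] = True
        self.mapping[lba] = self.next_free      # repoint the logical block
        self.next_free += 1

    def read(self, lba):
        return self.data[self.mapping[lba]]

    def stale_pages(self):
        """Pages that were written but no longer hold a current copy."""
        return sum(1 for i in range(self.next_free) if not self.valid[i])
```

Writing logical block 0 three times into a `ToyFTL(4)` uses three physical pages, leaves two of them stale, and still reads back the newest version. One more write fills the device, and the write after that has nowhere to go until something is erased.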

The drive has to keep track of all the blocks that have ever been written so it can return them - but, if you delete a file, you don’t really care about those blocks anymore, do you? If a lot of files are being deleted, you can help the flash controller out a lot by saying, “I no longer care about these blocks, you can garbage collect them whenever you want.” And this is exactly what the “trim/discard” messages do. When the OS deletes a file, instead of removing the pointers to it and leaving the data on the disk (which has zero performance penalties on a legacy magnetic disk), it removes the pointers and tells the disk what it can reclaim.
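To put a rough number on why that message matters, here is a deliberately simplified sketch. It assumes the controller has to copy every page it still believes is valid before it can erase a block; the function name `gc_copy_cost` and the 256-page block are illustrative assumptions, not measurements from these modules.

```python
def gc_copy_cost(pages_in_block, deleted_by_fs, trim_sent):
    """Pages the controller must relocate before erasing one erase block.

    pages_in_block: set of logical block IDs whose current copy lives here
    deleted_by_fs:  set of logical block IDs the filesystem has freed
    trim_sent:      whether the filesystem told the drive about the deletions
    """
    if trim_sent:
        # The drive knows the deleted blocks are garbage and skips them.
        still_valid = pages_in_block - deleted_by_fs
    else:
        # Without trim, the drive has to assume everything is still wanted.
        still_valid = set(pages_in_block)
    return len(still_valid)


block = set(range(256))        # hypothetical erase block holding 256 pages
deleted = set(range(200))      # 200 of them belonged to files since deleted

print(gc_copy_cost(block, deleted, trim_sent=False))  # copies all 256 pages
print(gc_copy_cost(block, deleted, trim_sent=True))   # copies only 56 pages
```

In this made-up case the untrimmed drive shuffles 256 pages around to free a block that the filesystem considers mostly empty, while the trimmed drive moves 56.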

As you might guess, this wasn’t happening. There are several ways to tell the OS to do this, either always, or at various intervals. And I was using none of them.

The first, “big hammer” method, is blkdiscard. This just goes through an entire block device (drive or partition), telling the flash controller it can wipe everything. Effective (sometimes - some devices say they did it and then ignore it), highly destructive, and a great way to zero a flash device.

The second method is fstrim. This is not destructive - it takes a mounted filesystem, scans for blocks that don’t have active pointers to them, and tells the flash controller it can delete those. If you’ve lost performance on a device, running fstrim should recover the bulk of the performance - and it won’t wipe the filesystem.

Or, finally, you can mount a device with the discard option. In this case, the filesystem will talk to the flash controller every time it deletes a file. It’s not enabled by default on a lot of modern distros, though, for reasons I don’t understand. In the case of something like a Pine64 image or ODroid image, I understand it - most of those are installed to SD cards, which generally don’t support trim in the first place. But if you don’t turn it on and really beat on a system, you end up in a bad place.
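Since not every device supports trim, it can be worth checking before bothering with any of this. A small sketch, assuming the kernel exposes the block queue attribute `discard_max_bytes` under `/sys/block/<device>/queue/` (a value of 0 means the device does not advertise discard support). The function name and the `mmcblk0` device name in the usage note are illustrative.

```python
from pathlib import Path


def supports_discard(device, sysfs_root="/sys/block"):
    """Return True if the block device advertises discard support.

    device is a kernel block device name such as "mmcblk0" (an example name).
    A missing or unreadable attribute is treated as "no support".
    """
    path = Path(sysfs_root) / device / "queue" / "discard_max_bytes"
    try:
        return int(path.read_text().strip()) > 0
    except (OSError, ValueError):
        return False
```

Calling `supports_discard("mmcblk0")` on the board itself would answer the question for the first MMC device. The `sysfs_root` parameter exists only so the function can be pointed at a fake directory tree for testing.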

The right answer, if you’ve found yourself in the same state I did, is to run fstrim - and then enable discard in the mount options in your /etc/fstab.
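The fstab change is just adding `discard` to the fourth (options) field of the relevant line. As a sketch of that edit, assuming whitespace-separated fstab fields, here is a hypothetical helper `add_discard` that rewrites a single line and leaves comments and malformed lines alone. The `UUID=abcd` entry in the example is made up.

```python
def add_discard(fstab_line):
    """Return fstab_line with 'discard' added to its mount options.

    Comment lines, blank lines, and lines with fewer than four fields
    are returned unchanged. Fields in the result are tab-separated.
    """
    stripped = fstab_line.strip()
    if not stripped or stripped.startswith("#"):
        return fstab_line
    fields = stripped.split()
    if len(fields) < 4:
        return fstab_line
    opts = fields[3].split(",")
    if "discard" not in opts:
        opts.append("discard")      # idempotent: never added twice
    fields[3] = ",".join(opts)
    return "\t".join(fields)


# Hypothetical root filesystem entry:
print(add_discard("UUID=abcd / ext4 defaults,noatime 0 1"))
```

Editing the file by hand works just as well for one line. Either way, the new option only takes effect after a remount or reboot, and it does nothing for blocks that were freed before it was enabled, which is why the fstrim run comes first.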

Interestingly, sufficiently new versions of mkfs.ext4 will discard blocks as they create the filesystem on a supported device!

Full Set Benchmarking

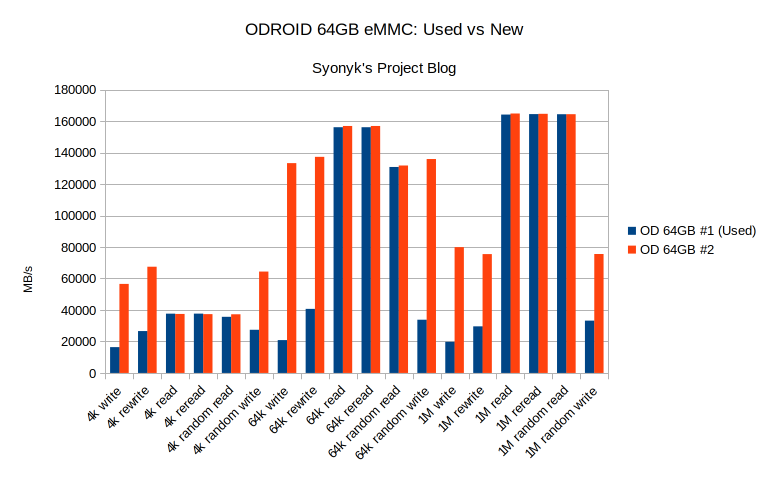

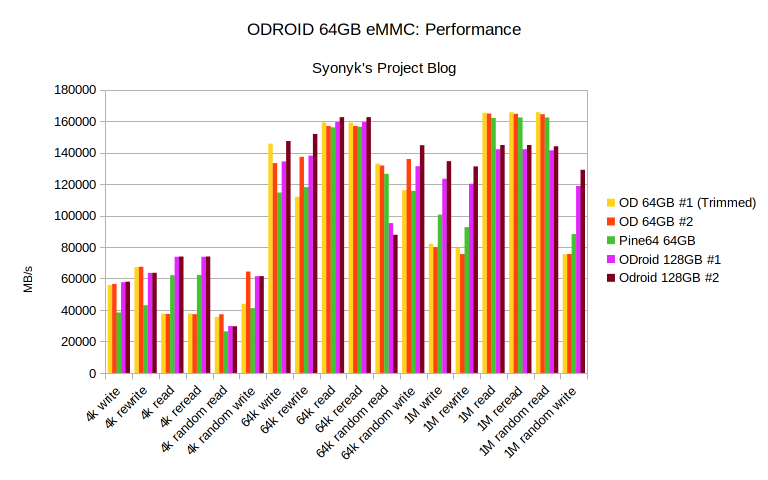

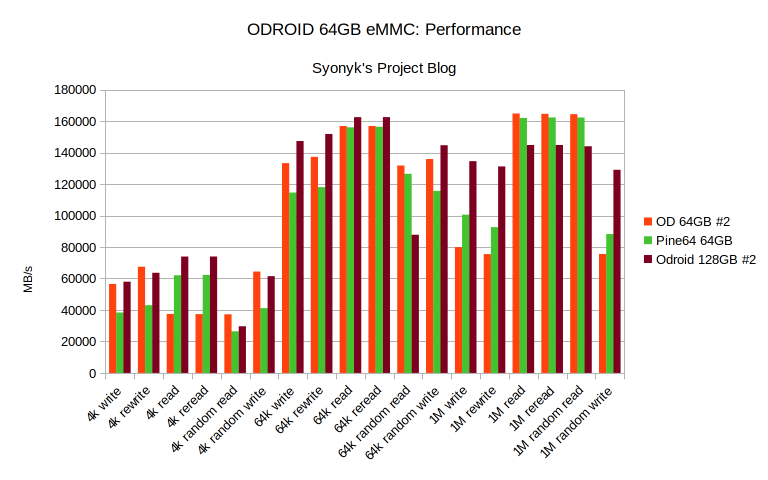

With that done, results start looking a lot more sane. Here, I’m comparing two ODroid 64GB cards, one Pine64 64GB card, and the two ODroid 128GB cards - doing the benchmarking on the N2+. I’m using ext4, default mount options, etc. Nothing fancy.

It’s a busy chart, but as everything more or less agrees (within a manufacturer/size), I can simplify it a bit, and just have one representative sample of each. Still busy, but somewhat better, and, no, you really shouldn’t let me pick colors freely (but I am trying to be consistent, per feedback from the Battle of the Boards post).

First, I think it’s clear that the Pine64 module is actually different from the ODroid modules - it performs quite differently from either of the ODroid modules. It’s typically slower in write, and about equal in read. It’s faster in 4k reads, until you get to random reads, where it’s a bit behind. I don’t think there’s a clear winner either way here between the two - if you really care about performance, you’re probably not using an eMMC module.

What’s interesting is the performance delta between the 64GB and 128GB ODroid modules. The 128GB module is substantially faster in 4k reads, though about even with the others in random reads. In the 1MB test suite, though, it’s consistently faster in writes, but also consistently slower in reads. I assume this means it has more places to write data, but also more metadata to sort through during reads, though I’m not sure it makes enough of a difference in any sort of practical use.

Also, I’ve not loaded the modules up with heavily multithreaded read/write loads to see how they perform. If you need reliable performance in that sort of load, you probably should be using some sort of NVMe SSD with a far fancier (and rather more power hungry) controller.

But… of these? I think the right answer is to just pick what you can find, of the desired size. I’d like to test a Pine64 128GB module, but they’re out of stock. Of course, the ODroid 128GB modules are out of stock now too. Supply chains and all. But they are interchangeable, and they do perform in at least the same general ballpark as each other - which is useful enough.

But Wait! What About F2FS?

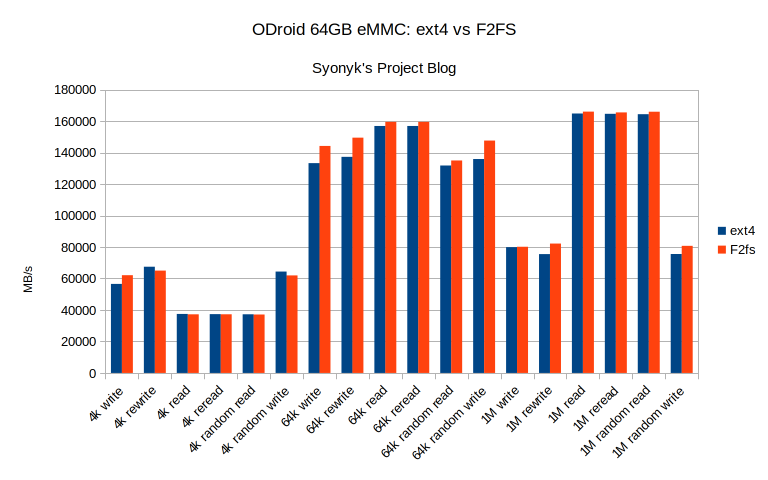

Of course, if you were screwing with low grade eMMC controllers at a certain point in history, you’ve got your hand in the air - “But what about F2FS?” F2FS (Flash Friendly File System) is a log structured filesystem that is designed to work better with low end eMMC controllers that can’t really handle the complexities of a modern filesystem - think a cheap Android tablet, and trying to extract some less-than-absolutely-abysmal performance from their storage. Or, an old worn out Nexus 7, same problem.

Well, head to head, same exact eMMC, it’s worth a tiny bit of performance. Is this enough to be worth it over using a dead-reliable filesystem like ext4? I’d say “Certainly not.” Again, if those little bits of performance matter to you, get a better storage device.

Trim Your Storage!

On the other hand, what this really shows is the need to trim your eMMC (and SSDs - even a recent Ubuntu 20.04 install doesn’t seem to have the discard option enabled, though it’s less of an issue for a desktop SSD). I expect it’s not enabled because of the risk of data loss with ill-behaved cards, but if you want performance, especially on a heavily thrashed little eMMC module, you’re going to need it.

Or, at least, run fstrim on a regular basis. That works too. You can do it on a schedule, or just every now and then if you feel the disk slowing down, or if you’ve done something like a couple kernel builds and then deleted all of the build tree.

Just another day in the joys of gutless wonder ARM computing that makes up more and more of my life!
