It shouldn’t be a surprise to people I talk with regularly that I’m more than a little bit skeptical of the state of the modern tech industry (despite having worked through a range of it and still doing something that can be described as “tech” for a living). Lately, though, I think I’ve put my finger on the core of the problem. Consumer tech has crossed over from “diminishing returns on investment” to “negative returns on investment” - and this explains an awful lot of what we’re seeing going on right now. If I’m right, it also means that things aren’t going to get any better unless we change track drastically - but I’m far from certain that those actions are even going to get generally considered, much less actually happen.

Hang in with me, and I’ll explain what exactly I’m talking about.

The Law of Diminishing Returns Over Time

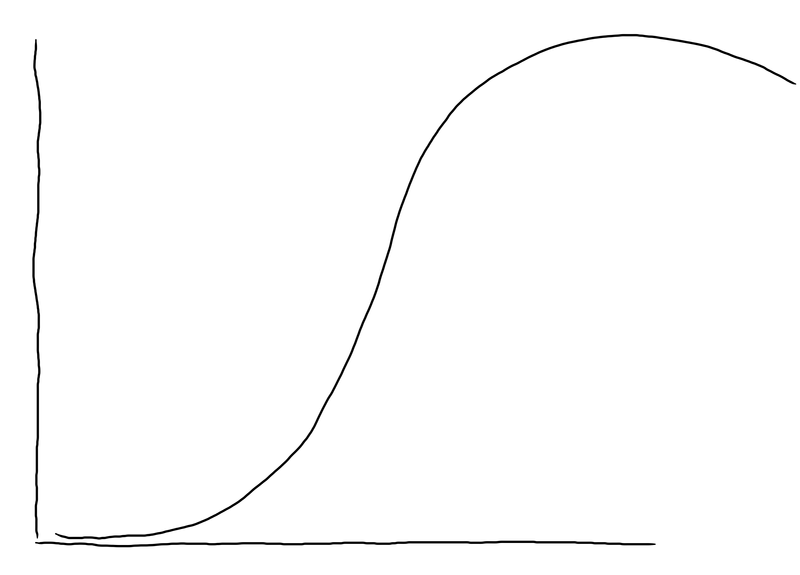

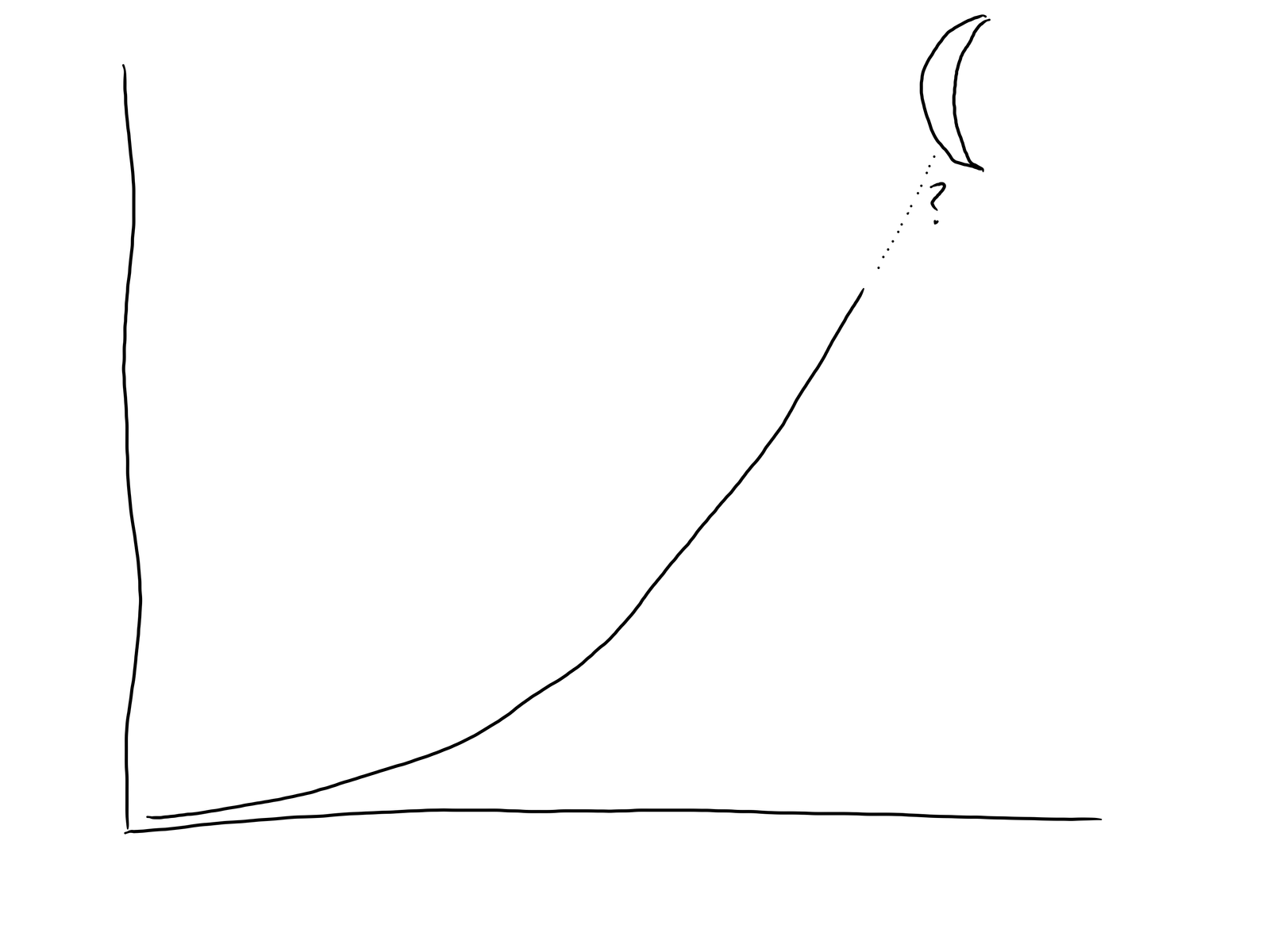

I expect a lot of people have heard of “The law of Diminishing Returns” before. It’s the concept that, for each incremental investment of some resource, you get more of the desired outcome - but as time goes on, the amount of desired outcome you get for each unit of investment decreases, and eventually starts dropping. I want to explore this curve in a bit more detail, with a focus on how each part of it tends to be discussed in the media and considered in culture.

The curve starts well enough. There’s some new field being developed and explored (technology, oil, finance, personal health, alcohol consumption - it really doesn’t matter, you can apply it to just about everything and you’re probably going to get something useful). There’s an investment (time, money, resources, skill… again, this is just broadly applicable). You get some return on that investment - and as time goes on, it looks like the more you invest, the more return you get! You’re learning how to do it, getting faster and faster. Life is amazing. This is going to revolutionize everything! Alcohol is incredible!

A lot of the time, people will look at this part of the curve, observe that it looks like the start of an exponential curve, and declare it as such. If you’re on an exponential curve, every unit you invest going forward returns more than the one before it. You’ll learn how to do it better, machines will learn to do it, AI will develop more AIs, etc. Soon, why… just look at the curve! It’s going to the moon and beyond (if you just HODL on!). I’m sure you’ve seen this sort of argument made - and if not, just go read anything by Kurzweil and the transhumanists. But you probably don’t have to - you likely know the refrain.

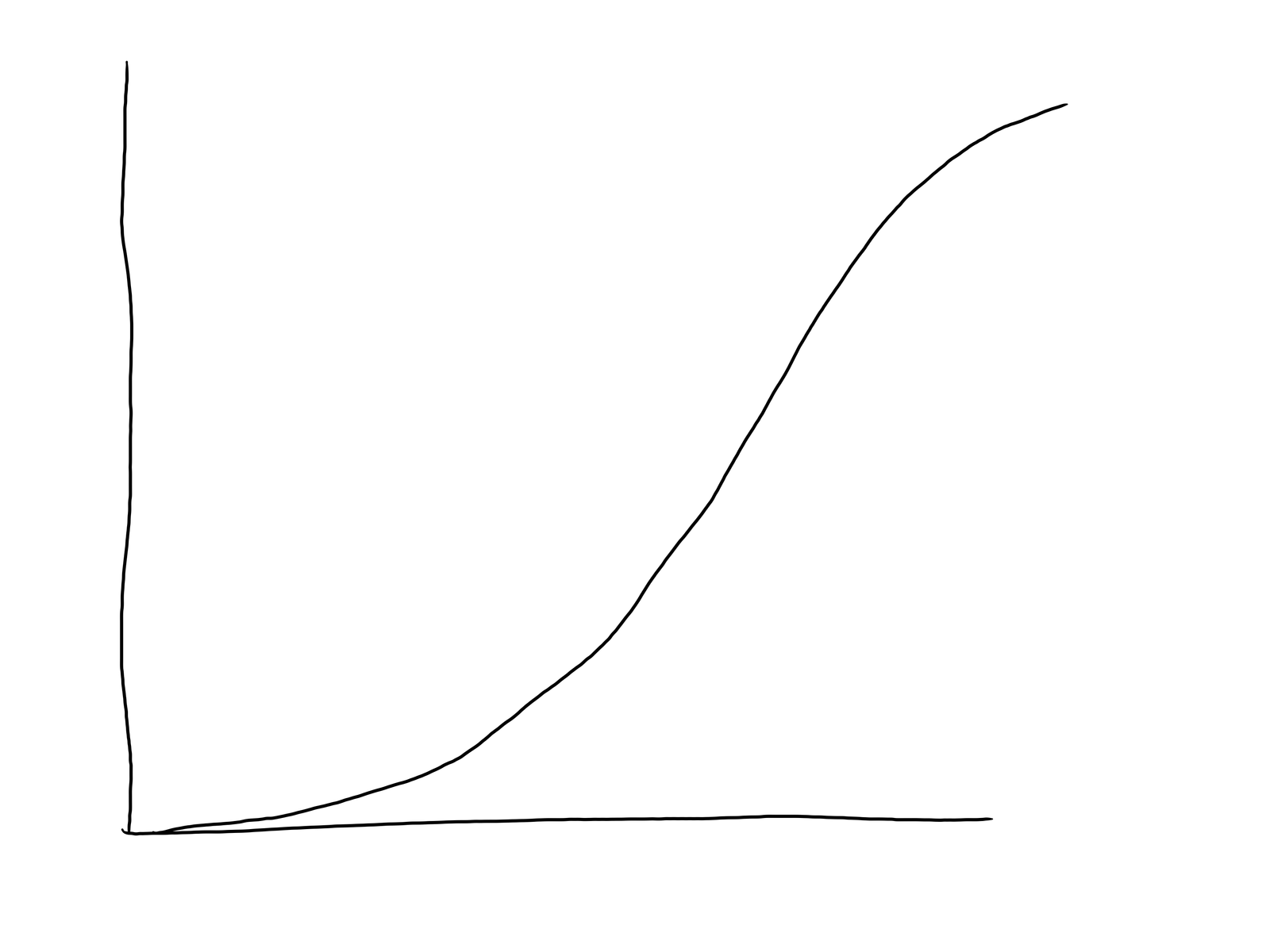

The problem is that soon enough, the curve starts looking a little bit more linear. It’s not really “bending up” like an exponential curve should. Eventually, it becomes clear that exponential growth isn’t going to happen - but that’s fine, really! Linear growth is still growth, so we’re going to need more resources to get to the hypothetical moon than we would have needed for the exponential case, but we can still get there. It’s a mature technology, and we can just continue iterating on it, continue improving, and this will work fine. Of course it will continue advancing, we’ve got a tab at the bar, what can go wrong?

Except… eventually, even that linear increase starts to “bend down.” And now you’ve got a problem. Each extra unit of investment now gets you less than the one before it - the “diminishing” bit in diminishing returns.

Of course, this is just a temporary (transitory!) situation, right? A bit of R&D spending, a bit of reshaping the path, and we’ll be right back to growth, of course. It’s just a temporary roadblock, and {insert some list of totally unproven concepts and some list of concepts not yet proven concretely impossible} will totally fix the issue. We just need a bunch more investment, about yesterday, likely involving huge amounts of debt from True Believers, and Growth Will Return! I’ll casually gesture in Intel’s direction here…

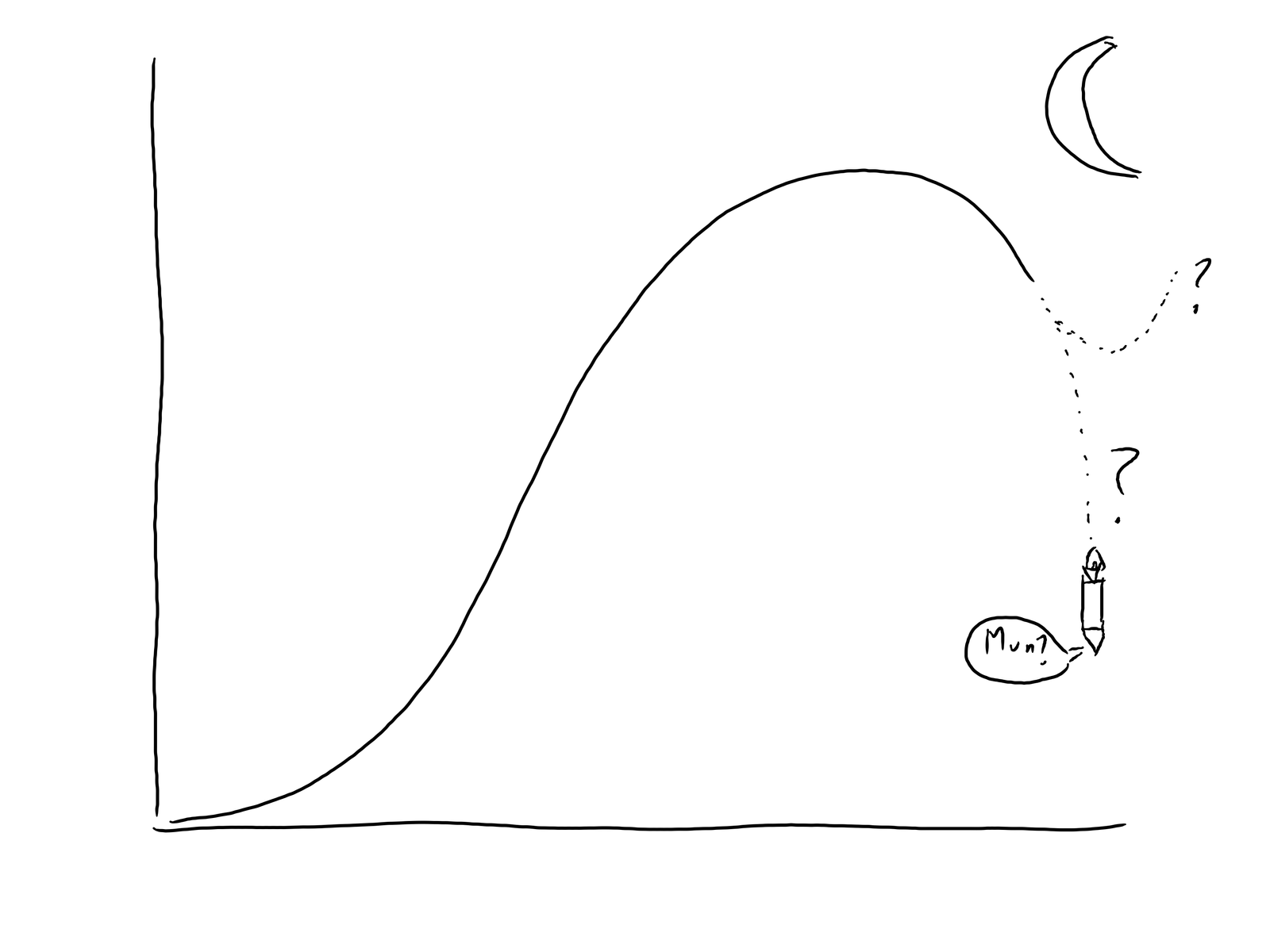

When You Hit Decreasing Returns

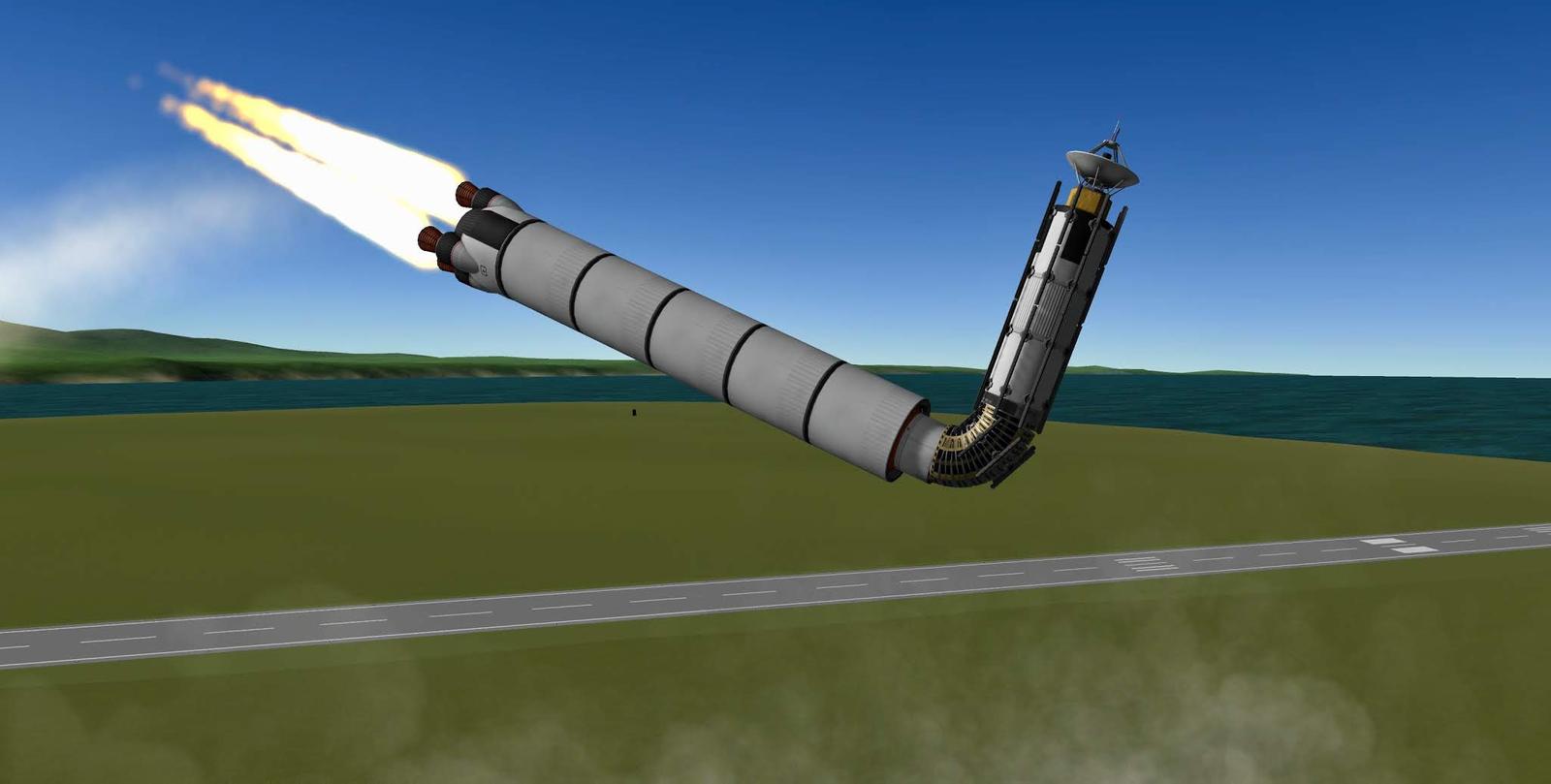

Of course, if you ignore the lessons of reality and continue investing, continue pushing, continue trying to get back to growth, you end up in a really nasty part of the curve: The region of decreasing returns. This means that you’ve passed the top, and now every additional unit of investment you make means things are actually getting worse. You’re going downhill. Not only has growth stopped, you’re actively going back down the hill. If you recognized you were here and quit, well… OK. Except, you can guess the problem. Nobody is going to recognize this and quit. If you’ve ever woken up not having any recollection of the end of the previous evening, but at some point “Yes, I do believe another drink would be a great idea!” crossed your thoughts or lips, you’ve personally experienced the fact that “more” does not always improve the situation - no matter how amazing the first few drinks were.
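To make the three regions concrete, here’s a minimal Python sketch using a made-up cubic “production” curve, `output = 6*x**2 - x**3`. The curve and its coefficients are purely illustrative assumptions, not data about tech or anything else. It looks at the marginal return of each additional unit of investment: early units return more than the ones before them (increasing returns), then each unit returns no more than the last (diminishing returns), and past the peak each extra unit actually lowers the total (decreasing returns).

```python
def output(x: float) -> float:
    """Hypothetical production curve: total outcome for x units invested."""
    return 6 * x**2 - x**3


def classify_phases(max_units: int) -> list[tuple[int, float, str]]:
    """Label each additional unit of investment by the return it brings.

    Returns (unit, marginal_return, phase) for units 1..max_units.
    """
    results = []
    previous_marginal = None
    for unit in range(1, max_units + 1):
        # Marginal return: how much this one extra unit added to the total.
        marginal = output(unit) - output(unit - 1)
        if marginal <= 0:
            phase = "decreasing"   # past the peak: more investment, less outcome
        elif previous_marginal is None or marginal > previous_marginal:
            phase = "increasing"   # this unit returned more than the last one
        else:
            phase = "diminishing"  # still positive, but no more than the last unit
        results.append((unit, marginal, phase))
        previous_marginal = marginal
    return results


for unit, marginal, phase in classify_phases(6):
    print(f"unit {unit}: {marginal:+g} ({phase})")
```

With this toy curve, units 1 and 2 add 5 and 11, units 3 and 4 add 11 and 5, and units 5 and 6 subtract 7 and 25: the same rise, flattening, bend, and slide described above.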

Of course, there’s no shortage of people insisting that progress will continue if you just. Usually that involves giving the people making those claims a lot of money/power/etc, and vague statements that are virtually impossible to hold them accountable to.

At this point in consumer tech, I think we’re in this region of the curve. It’s obviously a somewhat niche, rather unpopular opinion (especially among those who make their money trying to push the curve), but hear me out for the rest of this post, because I intend to make my point in a number of different areas, and hopefully you’ll see the parallels to other areas too.

But Why is This Happening?

What causes this? Opinions differ, but in the context of the tech industry, there are some terms of art that I think explain things quite well.

- Technical Debt
- Increasing Complexity
- “Our code base is how big?”
- “I don’t care, ship it, we’ll patch it later!”

If you’ve ever worked on a large software project (or, even better, have been part of creating a large software project from scratch), you should have a feel for this. Early on, it’s green fields, you can make huge progress quickly, you don’t run into any compatibility issues with other parts of the project, and you generally have a grand old time making vast improvements in a day or two of coding (and probably a few TODOs). Over time, this progress gets slower, because you can’t just randomly break things someone else relies on. People leave, new people join, some people write documentation, some people don’t, you develop testing frameworks, you write tests, you badger other people to write tests, someone starts commenting out tests that don’t pass, and eventually you find yourself contemplating some refactoring or another that will touch everything, and it’s just dreadful because you know it’ll break something important, but you just don’t know what will blow up - and there’s not enough test coverage.

Progress has slowed. There’s nothing wrong with a mature piece of software (actually, there probably are a lot of things wrong with it, and no, blowing it away probably isn’t the right answer either), but you can’t take those early, green field days of progress and assume that they’ll just last indefinitely. Reality doesn’t work that way - as proven, repeatedly, by tech startups. And how you deal with a mature project should look somewhat different than, say, “Move fast and break things.”

Or: Piles of Complexity on Top of Complexity

Another way of looking at the same problem is to consider that our tech stack is a pile of complexity, on top of a pile of complexity, that exists to solve problems created by the last pile of complexity, that was created to help improve performance to deal with the previous complexity, that… on, and on, and on down.

As the complexity increases, and the teetering tower of complexity grows ever taller, fewer and fewer people can understand the whole thing (I’d argue we’re 25+ years past the point where one person can really understand a software/OS/hardware stack for general purpose computing), and there are more and more nasty little corners where the unexpected teeth of complexity come out and bite.

I’ll pick on Intel here as a first example. Over the past few years, they’ve had to add an awful lot of software mitigations (complexity) for the fact that their microarchitecture leaks like a sieve if you know how to phrase your questions properly. All the boundaries that used to be considered security boundaries - between processes, between a user process and the kernel, between virtual machines, between the OS and SGX enclaves… ALL of these have come crumbling down in the face of carefully crafted queries of the architecture.

So the new complexity exists to fix the problems caused by the complexity of the microarchitecture. Why was the microarchitecture so complex? To get more performance out of the diminishing returns of silicon scaling. Why do we need more performance? Because software has gotten heavier just as fast as (or faster than…) hardware has gotten faster.

Remember, most of what we use computers for today, we could do on a single core, 750MHz Pentium III. Web, chat, video, programming, etc. And we could do most of that, even, on a 66MHz 486. Maybe a bit less video (RealPlayer was a thing, and if you’ve never experienced RealPlayer on a modem, count yourself somewhat lucky), and MP3 decoding struggled, but once you hit a few hundred MHz of single core performance, you could do just about anything we use computers for today. We just didn’t have layers and layers of complexity (I’m looking at you, Electron…) sucking all the cycles between typing and characters showing up on screen.

We can look at the never-ending security flaws present in software too. Despite decades of development, despite ever-better fuzzing and analysis tools, despite decades of improvements in red teaming software… we still have massive security flaws. Sometimes they’re in new stuff (Apple’s AirTag XSS injection), sometimes they’re in old stuff (who cares about the Windows print spooler anyway?), but they’re a result of complexity. Instead of focusing on securing existing code, the emphasis in the tech industry is on adding new features. Sure, we get Memoji that can animate an animal face to match your facial expressions, sort of… and iOS devices are still vulnerable to 0-click, full-pwnage remote compromise. You’ll excuse me if I think this set of priorities is backwards. But the features must flow, because that’s how people at tech companies get promoted. Ship it, get promoted, and abandon it to some other schmucks. There’s simply no glory in maintaining old, stable, well-used things like Reader. And we lose well-tested, mostly bug-free, stable software as a result.

Or the Automotive World…

We can see the same sort of thing going on in the automotive world. There are vast, vast amounts of complexity in any new car, and while some of it is suited to getting down the road with more safety on less energy, an awful lot of it isn’t. Ford claims its 2016 F-150 has 150 million lines of code, and everyone else is going to be similar. That’s ten Windows 95s’ worth of code - for a car!

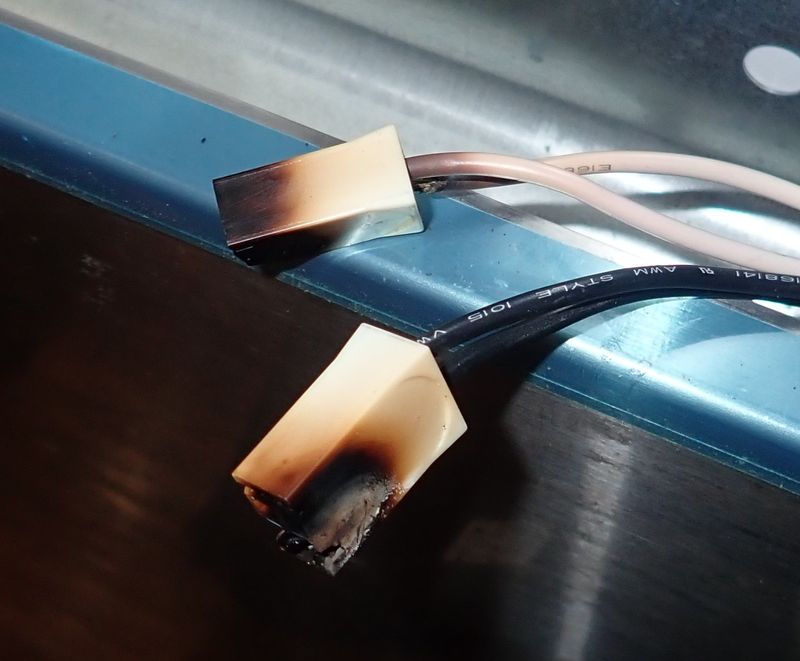

What’s the solution to this sort of complexity? It turns out to be largely “Ship first, patch later.” A couple years back, everyone was seemingly in awe about how Tesla could OTA update the brakes on the Consumer Reports test Model 3 to fix poor braking performance in their tests. What never seemed to get asked was, “How in the hell did you ship a near-production car with faulty brakes that you could easily fix in software?” “Braking” is the sort of really, really important function of a car that probably should be well tested long before release, and probably shouldn’t be easily updated via a remote software update! I like my brake controllers well known and trusted, though I’m also insanely tolerant of either direct mechanical or hydraulic linkages…

Fast forward a few years, and companies can’t even ship cars because they can’t get the chips for the infotainment systems, the digital gauge clusters, or various other things that, as far as I’m concerned, were just fine as simpler mechanical interfaces. Those simpler interfaces didn’t seem to have problems dying early, which is better than I can say for someone’s MCU with logging blowing out the flash’s write lifespan in less than a decade… or their “What’s the point in automotive grade, just ship laptop parts!” design decisions overheating and failing. Again.
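The flash-wear failure is simple arithmetic: a flash part can only absorb roughly its capacity times its rated program/erase cycles in writes before cells start wearing out, and constant logging spends that budget every single day. Here’s a rough Python sketch of that estimate. The capacity, cycle rating, logging rate, and write-amplification factor in the example are hypothetical placeholders, not figures for any real car’s hardware, and the model assumes ideal wear leveling.

```python
def years_until_worn_out(
    capacity_gb: float,
    pe_cycles: int,
    gb_written_per_day: float,
    write_amplification: float = 1.0,
) -> float:
    """Rough flash lifetime estimate, assuming ideal wear leveling.

    capacity_gb: usable flash capacity
    pe_cycles: rated program/erase cycles per cell
    gb_written_per_day: host writes per day (e.g. from logging)
    write_amplification: bytes physically written to flash per host byte
    """
    # Total data the part can physically absorb before hitting its cycle rating.
    total_write_budget_gb = capacity_gb * pe_cycles
    # What the flash actually writes each day once amplification is included.
    physical_gb_per_day = gb_written_per_day * write_amplification
    return total_write_budget_gb / physical_gb_per_day / 365


# Hypothetical numbers: 8 GB part, 3,000 cycles, 5 GB/day of logs, 2x amplification.
print(f"{years_until_worn_out(8, 3000, 5, 2.0):.1f} years")
```

With those made-up numbers the part lasts 2,400 days, well under a decade, and doubling the logging rate halves that again. Nothing about the car’s actual driving needs to change for the clock to run out.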

I’m told fancy connected systems in cars are nice. But you know what I think is nicer? Not having a car in which someone can remotely compromise it through the head unit. A car is too important to leave connected to the cell networks, at least with how we do software! But, don’t worry, they’ve added more complexity to new cars to hopefully prevent this attack… caused by complexity. It’s fine, right?

Is Your “Phone” Really Better?

Wrapping back around to the start of this post, there’s no question at all that a modern smartphone can accomplish far, far more than a landline, or than a candy bar phone, or even a flip phone. But I’ll ask the question, if you’re old enough to remember landlines - do you remember having conversations on phones? Calling your friend and just talking for hours? I promise you, it was a thing even into the late 90s - and, if you don’t recall it being a thing, and it sounds utterly horrid, I can also tell you that it wasn’t at all horrid. Landlines, especially in town, were very low latency compared to a modern cell phone - and latency seems to be getting worse.

It’s now very common to “step on each other” while talking, because you’ve got hundreds of milliseconds of (often randomly changing) latency across the link. That wasn’t the case with a landline unless you were calling across the country or around the planet (or phreaking your way around the globe via satellite links to deliberately create the delay). Cell phones are objectively worse for talking to people, because of all the focus on data delivery now (which is less latency sensitive than audio) - though I understand modern “landlines” are almost as bad, because they’re all packet switched to a central router too, instead of “calling your friend across town” being circuit switched within your local town.

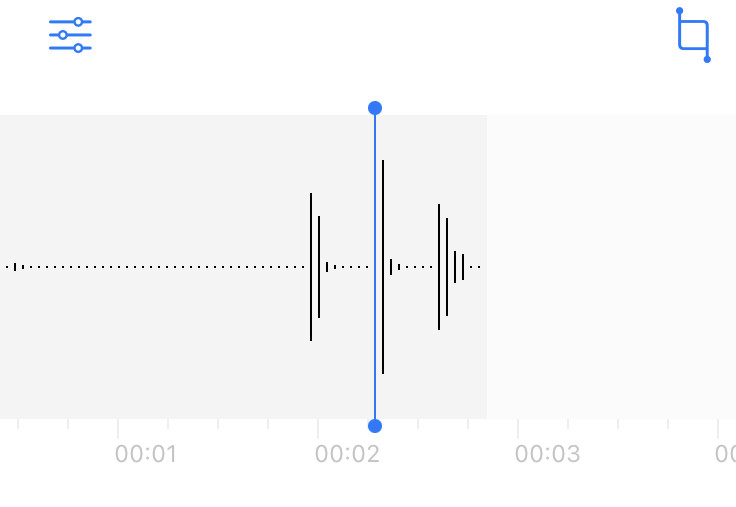

I couldn’t find good numbers, so I did an informal test myself. Two phones, on different carriers, next to each other. One muted. I “clicked” loudly, which is a sharp transient impulse from silence - and recorded both the click and the moment it came out of the other phone’s speaker. Near as makes no difference, it was 300 milliseconds. That’s about 340 feet of sound traveling through air, a bit more than a football field without the endzones. Of lag!

Try it yourself. Report back.
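If you want to try it, here’s a minimal Python sketch of the measurement. It assumes a single recording that captures both the live click and the far phone’s speaker, already loaded as a list of samples (reading the audio file is left out). It finds the first sample that crosses an amplitude threshold, skips a short hold-off so the click’s own ringing isn’t mistaken for the echo, finds the next crossing, and converts the gap into how far sound travels in air in the same time. The threshold, the 50 ms hold-off, and the 1,125 ft/s speed of sound (dry air at roughly 20 °C) are all assumptions; pick a threshold comfortably above your room’s noise floor.

```python
from typing import Optional

SPEED_OF_SOUND_FT_PER_S = 1125.0  # dry air at roughly 20 °C


def first_onset(samples: list[float], threshold: float, start: int = 0) -> Optional[int]:
    """Index of the first sample at or after `start` whose magnitude exceeds threshold."""
    for i in range(start, len(samples)):
        if abs(samples[i]) > threshold:
            return i
    return None


def click_latency_seconds(
    samples: list[float],
    sample_rate: int,
    threshold: float = 0.5,
    holdoff_s: float = 0.05,
) -> Optional[float]:
    """Time between the live click and the same click from the far phone's speaker."""
    click = first_onset(samples, threshold)
    if click is None:
        return None
    # Skip past the live click's own decay before looking for the echo.
    echo = first_onset(samples, threshold, click + int(holdoff_s * sample_rate))
    if echo is None:
        return None
    return (echo - click) / sample_rate


def feet_of_air(latency_s: float) -> float:
    """How far sound travels through air in the same amount of time."""
    return latency_s * SPEED_OF_SOUND_FT_PER_S


# Synthetic stand-in for a real recording: one second of silence at 8 kHz.
rate = 8000
signal = [0.0] * rate
signal[800] = 0.9   # the live click, at 0.1 s
signal[3200] = 0.7  # the same click out of the far phone, at 0.4 s
latency = click_latency_seconds(signal, rate)
print(f"{latency * 1000:.0f} ms of lag, or {feet_of_air(latency):.1f} ft of air")
```

The synthetic signal puts the two clicks 300 ms apart, which works out to 337.5 feet at the assumed speed of sound. A real recording will be noisier, so expect to tune the threshold and hold-off.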

These days, for most people, a “smartphone” is just a black mirror you buy (a high end one costs more than most of the cars I’ve owned in my life) and pay a monthly service fee for (also not cheap), which a range of other people then use to try and turn your attention/eyeballs/etc into their money via advertising delivery. In exchange, they gather as much data as they possibly can on where you are, what you’re doing, who you’re near, and while I don’t think that most apps are actually listening to conversations, the reason people seem to think they are is that there’s enough predictive information extracted to make it seem that way.

Unless, of course, it’s a “Smart TV.” Then it probably is doing all those evil things you’d rather your equipment didn’t - and certainly doing ACR (Automatic Content Recognition) on any connected inputs to report up what you’re doing. Your DVD? Logged and reported. Gaming? Logged and reported. Smart TVs are not to be trusted in the slightest - but good luck finding a TV that isn’t “smart.”

Again, as time has marched on, we’ve gone to ever-more featured devices that are ever-worse at being the “phone” things we still insist on calling them. Did we stop talking on phones because the alternatives are more convenient? Or because talking on phones has gotten worse?

Where Will Things Go?

Maybe I’m entirely wrong here. Maybe technological progress will resume, we’ll… find some way past the awkward fact that subatomic transistors don’t work, adding fins gets more expensive (on microchips, cars, and rockets), and software written on 7 layers of abstraction doesn’t really work properly. Or maybe I’m right, and things will continue to just clunk along, getting slowly worse with every new release, boiling the frog (us) slowly.

I expect we’re near the end of hardware performance improvements. Things will get marginally faster on benchmarks, and a handful of things might make use of it. We’ll see more exotic stuff like vector engines that are only useful for a handful of tasks (and don’t you dare ask what happens to the rest of the chip’s performance when AVX1024 comes online), but I doubt there’s more than a doubling or two left in terms of single-threaded performance (and most of those improvements will come from gigantic caches - seriously, the M1’s L1 caches are insane!). We’ll see software continue to just get slower over time, with more layers, more bugs, more “… how could you have missed this basic sort of security bug?” bugs, and more “Well, I hope that wasn’t aimed at me” sort of catastrophic security exploits.

But I just don’t see how we can continue along these paths, adding complexity, adding more and more negative features, just… “progressing” into the future fewer and fewer people seem to want. Unless you’re profiting massively off all this - then it’s very, very exciting, except for the part where fewer and fewer people seem to want to be a part of your profit generating enterprise. Maybe pivot to VR or something? It’s the tech of the future! Just like it has been since the 1980s…

So What Should We Do?

Well… good question. And this is the hard part. If the world is careening off madly in one direction, and you think that direction is insane, then there are a few options.

- Join them, because that’s where everything is going, and it looks like fun!
- Ignore them entirely and do your own thing, forging your own tech path.
- Find ways to resist the madness, while still gaining some of the benefits of the tech path.
- Probably a few other things I haven’t even considered, which might make for a good conversation in the comments.

For the past few years, and rather more aggressively lately, I’ve been trying to plot a course through the third option - resisting the madness, and trying to find some less-evil ways of using the modern tech systems without fully engaging in them. I’m going to be talking about a few aspects of this in more detail in various future posts (and I’ve covered some in the past), but I think it’s long past time to really sit down and figure out where I want to stand regarding modern consumer technology, and I’ve been thinking about it for quite some time.

Unfortunately, with the direction modern consumer tech is heading, I increasingly find myself squeezed into fewer and fewer paths that I consider remotely sane - and even some of those have closed recently, or look to be closing in the near future.

I’ve been trying to reduce my dependence on the bleeding edge of modern consumer tech, and trying to find non-objectionable ways to use that which remains. There are certain things I simply can’t do in this set of constraints, and I have to be just OK with not doing those things (they tend to involve tasks like modern flagship games and high res video editing).

Finding Simpler Solutions

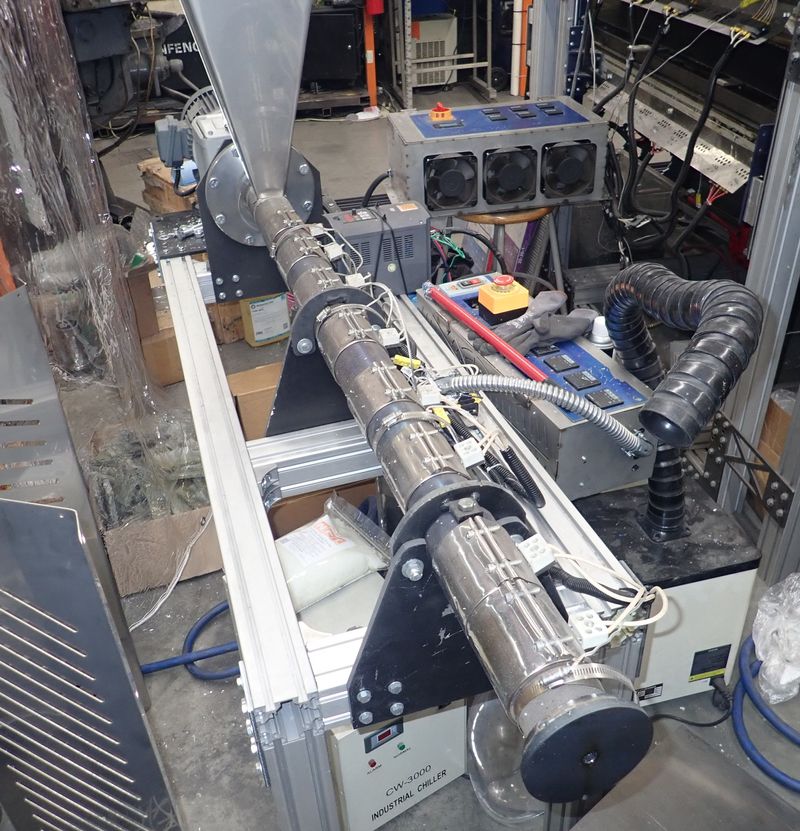

In a lot of ways, though, I think it’s time to start mining the past for the “simple solutions” we used to run. You know what chat system doesn’t struggle on a slower computer? IRC. It’s still the same as it’s always been from the 90s and on - and the clients haven’t changed an awful lot either. But it works. And I’ve hosted a “big enough to be useful” IRC network on basically nothing (a few dozen users on a pair of 16MHz 68030s).
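Part of why IRC holds up is that the protocol is just short lines of text over a TCP connection. As a rough illustration (a sketch, not a full client; the nickname, channel, and server names below are made up), here’s Python that builds the few lines a client sends to register and join a channel, parses the lines a server sends back, and answers the server’s keepalive PING, following the message format described in RFC 1459 and RFC 2812.

```python
from typing import NamedTuple, Optional


class IrcMessage(NamedTuple):
    prefix: Optional[str]  # sender, e.g. "nick!user@host"; many server lines omit it
    command: str           # e.g. "PRIVMSG", "PING", or a numeric like "001"
    params: list[str]      # arguments; only the last one may contain spaces


def registration_lines(nick: str, realname: str, channel: str) -> list[str]:
    """Lines a client sends to log in and join a channel (each ends in CRLF)."""
    return [
        f"NICK {nick}\r\n",
        f"USER {nick} 0 * :{realname}\r\n",
        f"JOIN {channel}\r\n",
    ]


def parse_line(line: str) -> IrcMessage:
    """Split one raw IRC line into prefix, command, and parameters."""
    line = line.rstrip("\r\n")
    prefix = None
    if line.startswith(":"):
        # Optional ":prefix " at the very start identifies the sender.
        prefix, line = line[1:].split(" ", 1)
    trailing = None
    if " :" in line:
        # Everything after the first " :" is one parameter, spaces and all.
        line, trailing = line.split(" :", 1)
    parts = line.split()
    params = parts[1:]
    if trailing is not None:
        params.append(trailing)
    return IrcMessage(prefix, parts[0], params)


def reply_to(msg: IrcMessage) -> Optional[str]:
    """Answer the server's keepalive PING with a matching PONG."""
    if msg.command == "PING":
        return f"PONG :{msg.params[-1]}\r\n" if msg.params else "PONG\r\n"
    return None


msg = parse_line(":alice!a@example.org PRIVMSG #retro :hello there\r\n")
print(msg.prefix, msg.command, msg.params)
print(repr(reply_to(parse_line("PING :irc.example.net\r\n"))))
```

That’s most of what a minimal client has to understand, which goes a long way toward explaining why IRC runs happily on hardware that would choke on a modern chat app.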

There’s been what looks like a bit of a return in tech circles to the “old web,” of which I’d like to consider this blog part. Simple pages, lightly hosted. If you want to go even further, there’s the Gemini project.

And maybe we need to start looking at solutions that just don’t scale quite as well. Not everyone needs to be on the same platform. Perhaps something like Matrix, where “dozens of people” can share a server, with federation working to other servers, should be part of the future instead of the “everyone in one place, all being datamined” approach to communications that seems so common today.

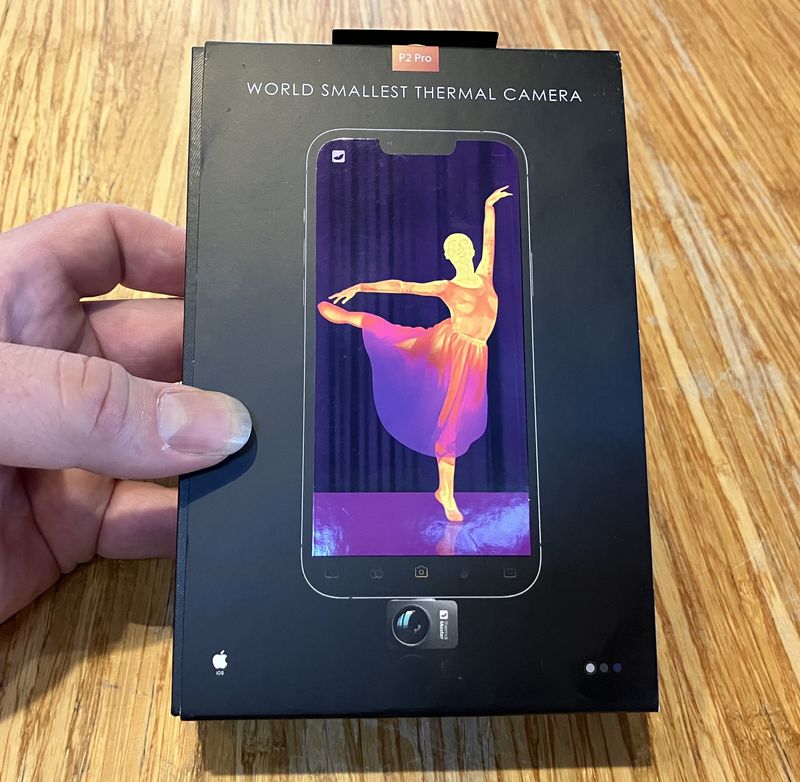

I’ve also been trying to go back to single function devices - preferably obtained used. Not only do they tend to be refreshingly good at their jobs, they don’t tend to get randomly obsolete as fast. My daily e-reader was released in late 2013, and it still works just fine to render text on an e-ink display. I’ve gone back to standalone cameras, and my “daily driver” camera for long range photos was released in late 2010. I expect these devices to continue working long into the future, and with either no networking (camera) or very limited network functionality (Kobo), I’m not that worried about them in terms of security - though the Kobo is still getting regular software updates!

I’m open to ideas and discussion. I just don’t see how the piles of complexity can keep building before something topples over.

Comments

Comments are handled on my Discourse forum - you'll need to create an account there to post comments. If you've found this post useful, insightful, or informative, why not support me on Ko-fi? And if you'd like to be notified of new posts (I post every two weeks), you can follow my blog via email! Of course, if you like RSS, I support that too.