It’s another year, and for me, that means it’s a good time to start with another Battle of the Boards - this time, with the Rock5 included! I’m going to be talking more about these boards in the coming weeks and fleshing out some old posts on some older SBCs that I still use on a daily basis. But one could comfortably call this post, “The Rock5B Crushes All Others.”

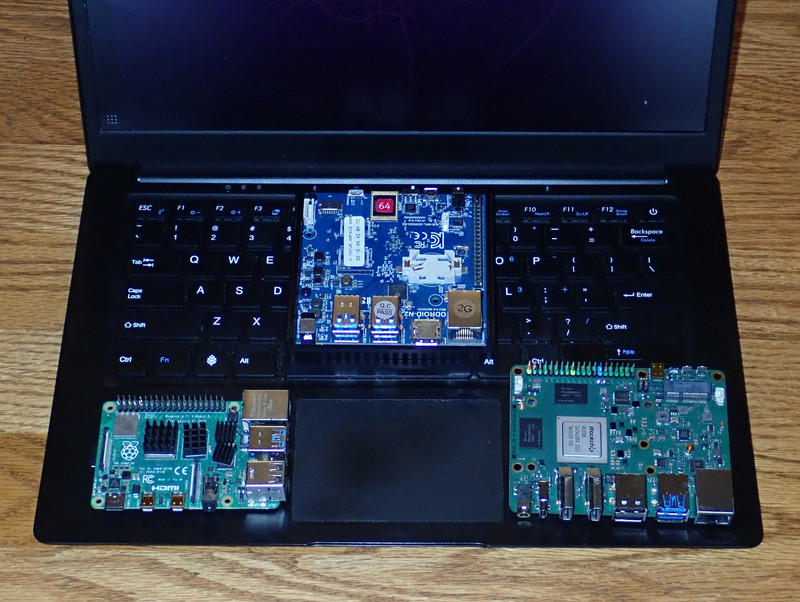

The Systems

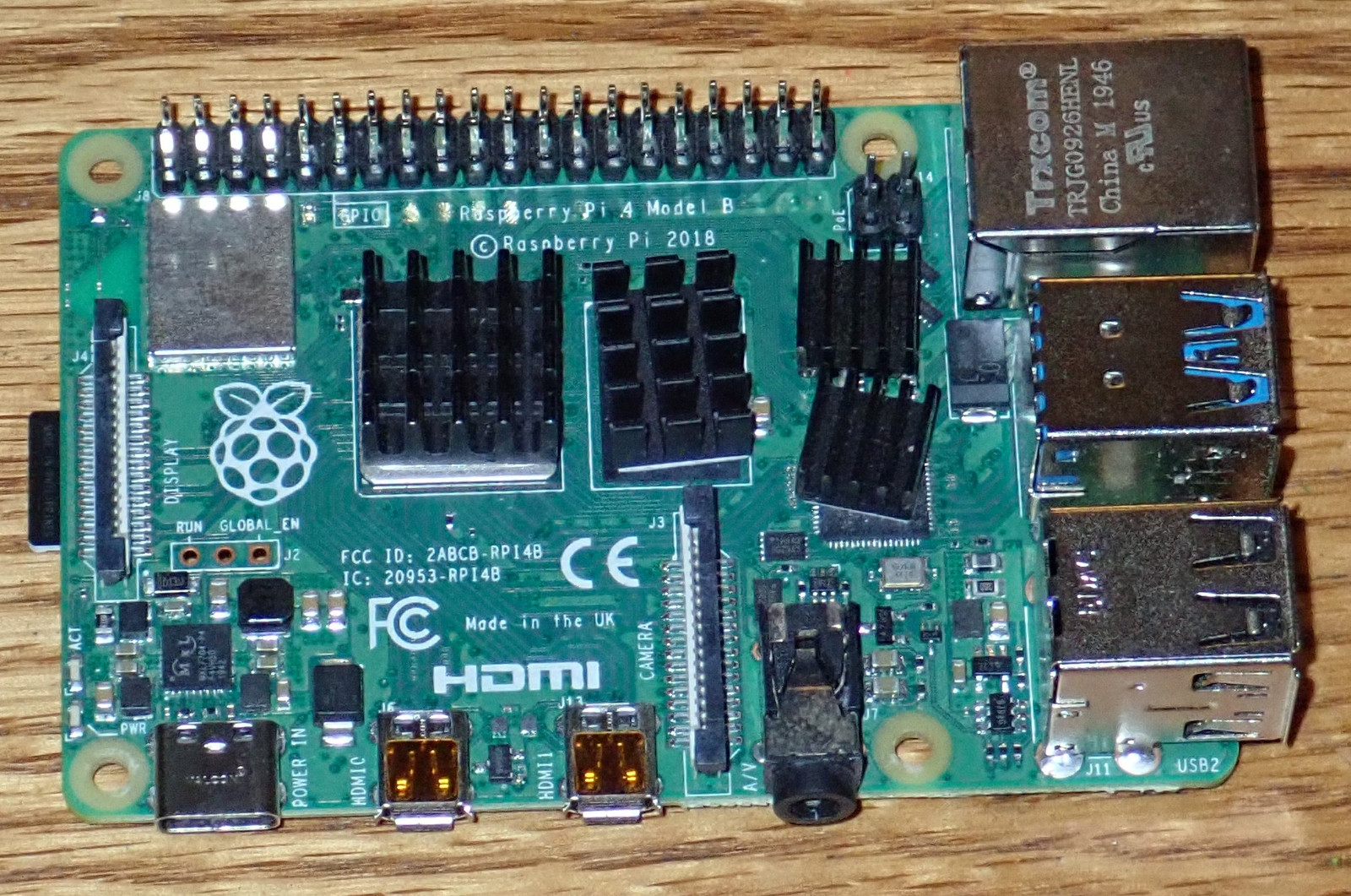

Raspberry Pi 4

The Raspberry Pi 4 used to be the “standard reference SBC” for comparisons, but anymore, they’re impossible to find for anything near a sane price - and I sold one of my 8GB Pi4s for… way too much money. So I’m using a 4GB unit I keep around for roughly these purposes. SD card only, quad A72s at 1.5GHz, 4GB RAM, 64-bit OS. It pains me greatly to say it, but with 8GB Pi4s going for $150-$200 on eBay, there’s no reason to buy one anymore for general desktop use. I know supply chains, demand, whatever, but the reality is that a Pi4 is not worth that kind of money. Sorry. Sucks, but that’s where things are.

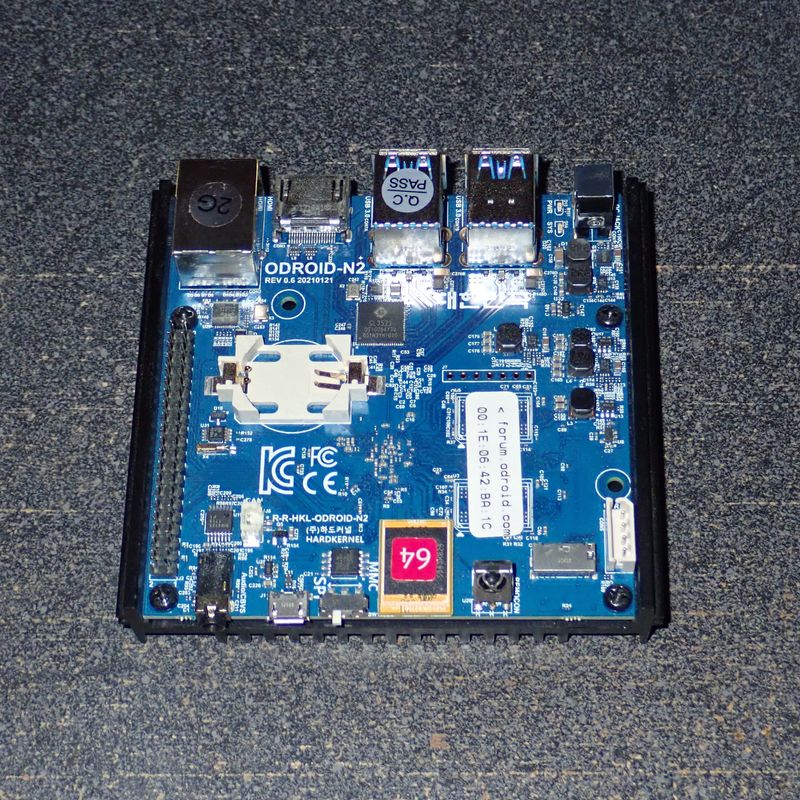

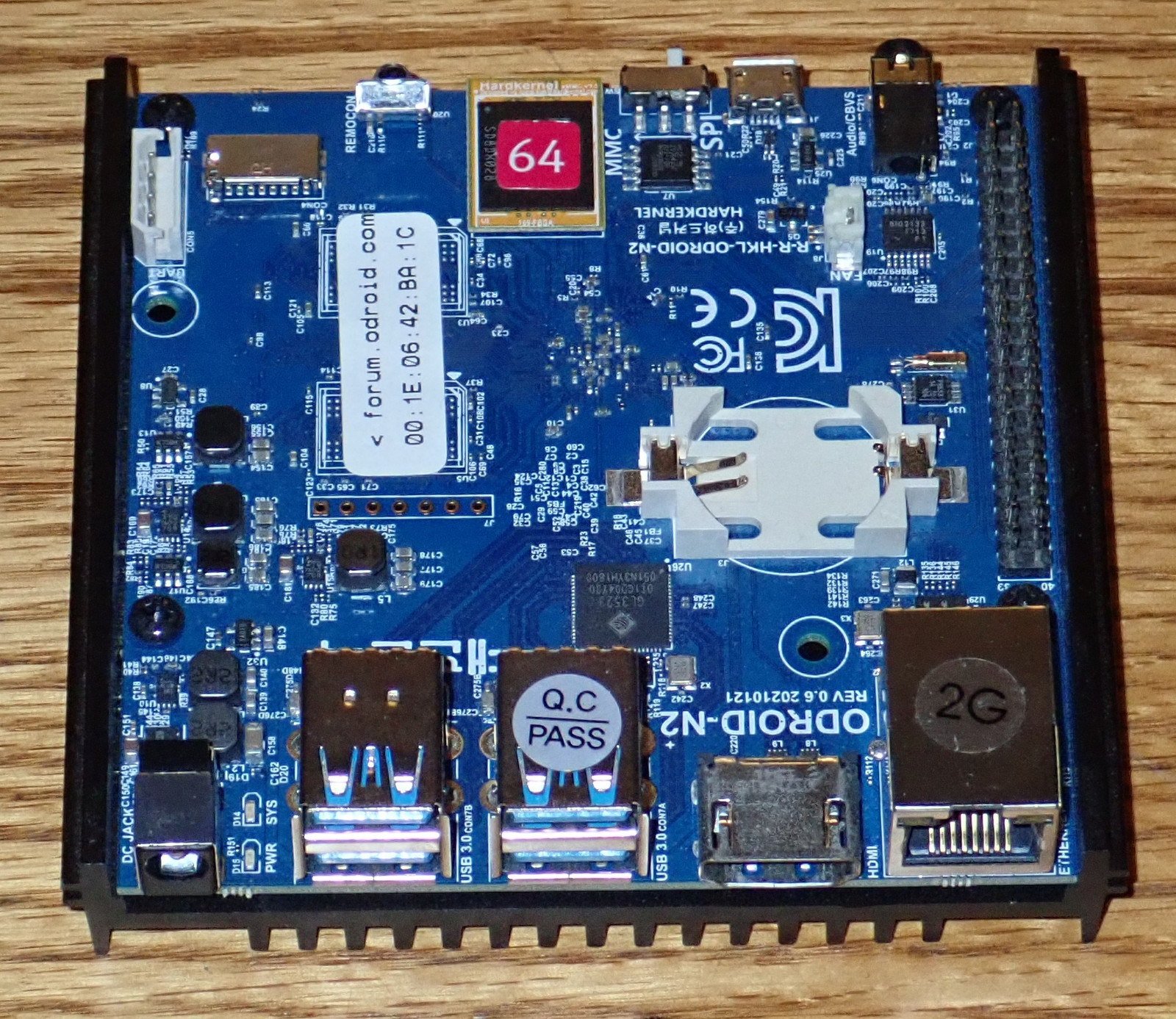

ODroid N2+

The ODroid N2+ has been serving as my “Pi Replacement” office desktop system for a while, and I have to say, it’s come a long way in terms of daily usability. With older kernels, they sucked to use, but lately… they really do just sort of sit there and work. I’ve got no complaints about them, beyond the lack of RAM. Quad A73s at 2.4GHz, and a pair of A53s at 2.0GHz, up to 4GB RAM, and eMMC support that’s not halfway bad.

PineBook Pro

The PineBook Pro remains a boring, serviceable laptop. Nothing has really changed. They fixed the utterly horrid trackpad with a firmware update, and mine is a “toss in the bag laptop” for just about everything. Battery life remains insane, and CPU performance isn’t too bad. It’s rocking a pair of A72s at 1.8GHz (stock - mine are up at 2GHz with no issues), with four low power A53s at 1.4GHz, 4GB of RAM, and again, eMMC storage.

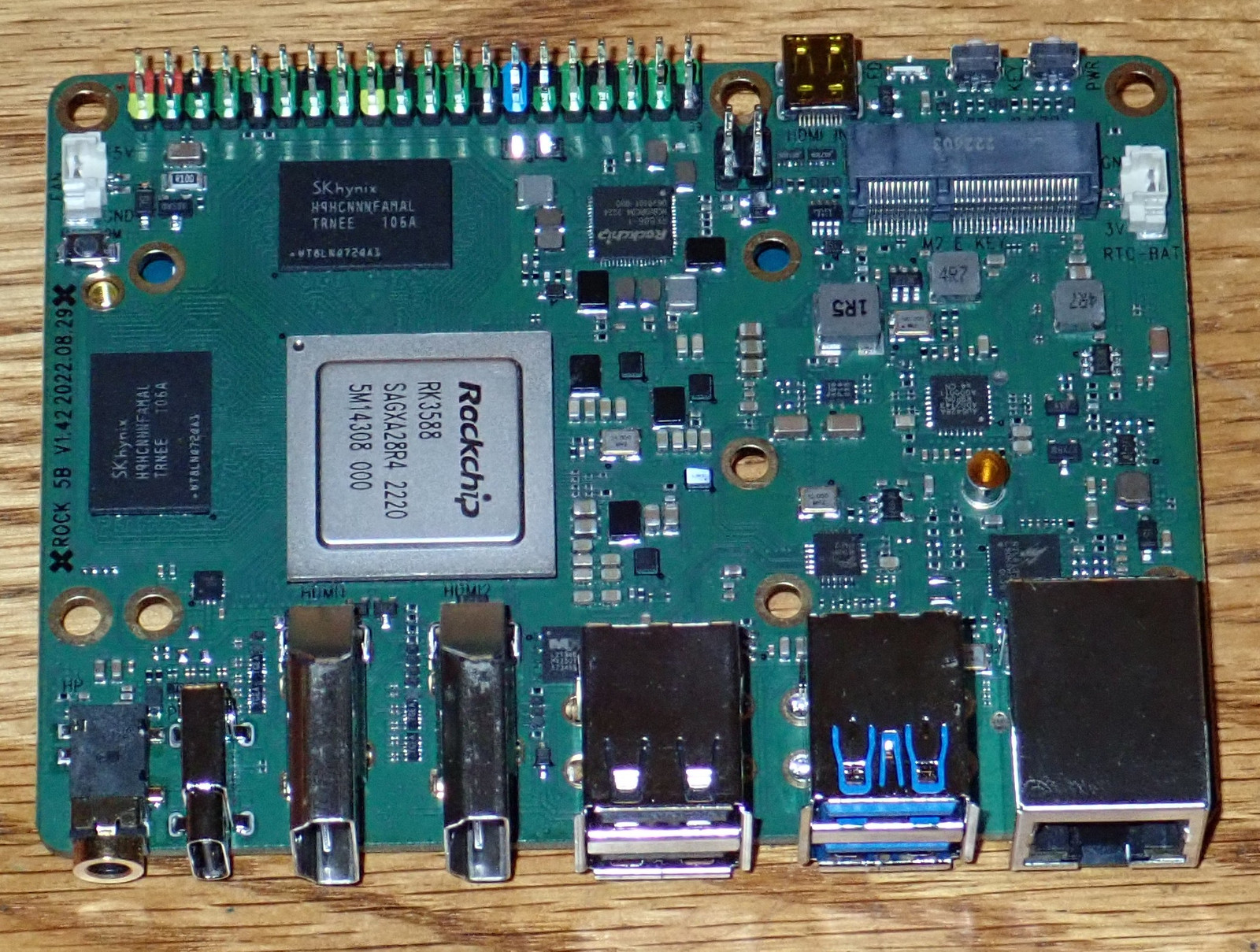

Rock5B

I have been… uncomfortably excited about this system since I learned about it, and I’ve got a few in my grubby little paws now. This is, per the paper specs of the SoC, The Future of ARM SBCs. It’s based around the RK3588 SoC, and it’s swinging four A76 cores at 2.4GHz, four A55 cores at 1.8GHz, and a whopping 16GB of RAM (with some respectable bandwidth behind it). Plus NVMe support for storage! On paper, it should be a solid performer… but at the start of 2023, software support is poor. You’ve got the BSP (Board Support Package) kernel, and that’s about it. It’s not a good kernel, and graphics performance seems to be entirely sub-par (to the point that I’m not even testing it). But the CPUs are there, and so it’s in the mix. There are a bunch of RK3588 based SBCs coming out, and I’d expect them to all perform more or less the same - so, if you’re curious about some other board with the same chip, I’d expect very similar results.

Seriously, NVMe! On an ARM SBC! Is it any good? In the words of the Kool-Aid Man, “Oh Yeah!”

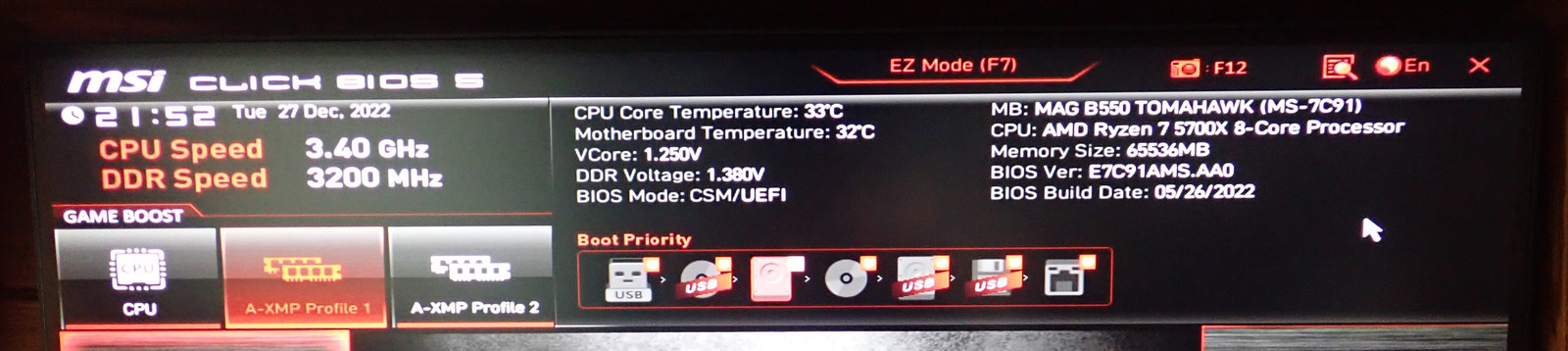

Ryzen 5700X

This year, I’m adding the house desktop into the comparisons, because the Rock5 needs something a bit stouter to compare against. It simply slaughters the rest of the ARM SBCs across the board, so I’ve run some tests on the house PC. This is a Zen 3, 5700X based system with 64GB RAM, NVMe, the works. It’s not a low end system, and I’ve included it in most of the tests to demonstrate just how far the ARM SBCs are coming. It’s worth noting that hyperthreading on my 5700X is disabled - it’s nominally an 8C/16T processor, and I run it as an 8C/8T processor. So some of the throughput performance may be a bit lower than otherwise achievable on it - but that’s how I run my systems.

Here’s the really awkward part of it. The Rock5, running single core GeekBench, pulls 3-5W. Multicore, it pulls about 5-8W for most of the run, peaking briefly at about 11W. The house desktop pulls 140W under single core GeekBench, and up around 190W for multicore. Ouch. As you’ll see, the Rock5 delivers a substantial fraction of the performance on a tiny fraction of the power - so keep in mind the Rock5 is pulling its performance on about 6% the power use.

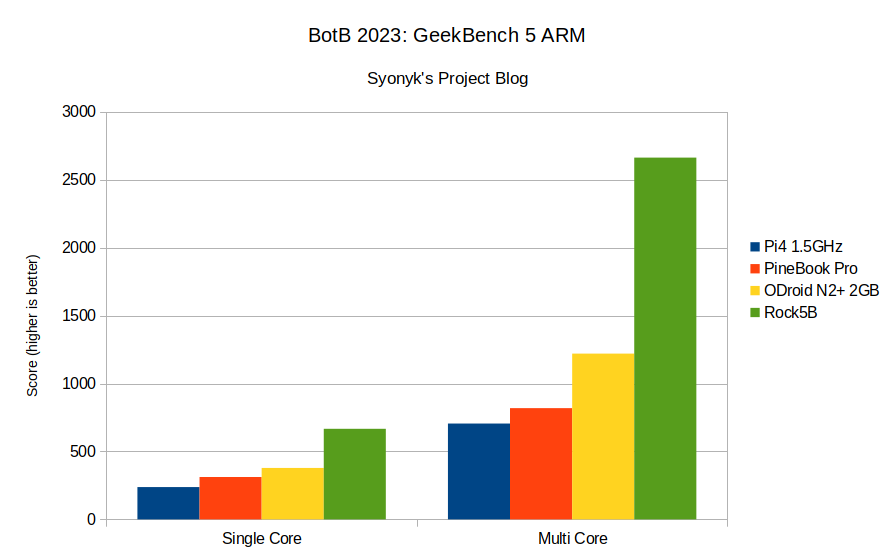

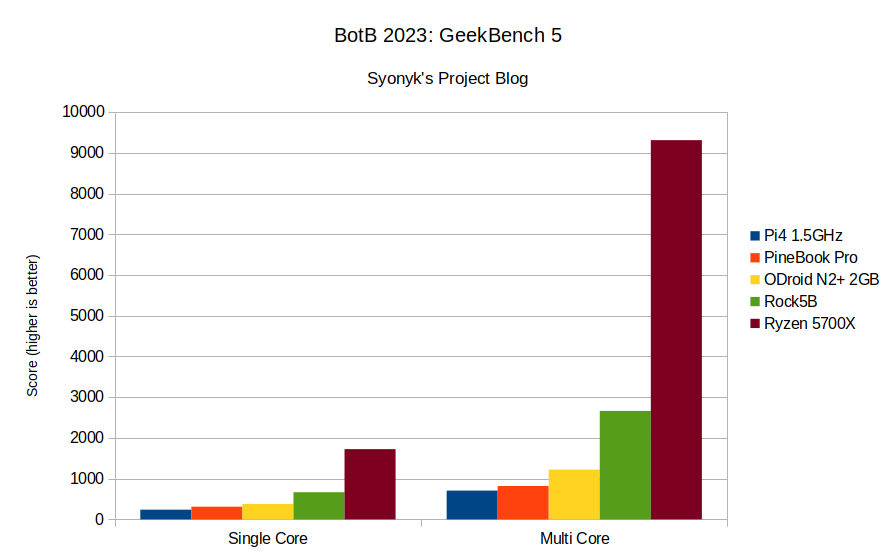

GeekBench 5

We all know and love GeekBench, right? It’s a benchmarking utility that runs a range of tests on various systems, doing the same work on different platforms. There’s a beta out for ARM Linux, and I’ve finally gotten around to methodically running it on all my little systems. It measures both single threaded performance and “max out the system” sort of peak throughput - and, importantly, it’s cross platform. So you can compare the ARM SBCs to things like the latest iPhones, to powerful x86 desktops, to Core 2 Duo laptops. The short answer here is “Holy crap, Apple’s nailing it!” - but, outside that ecosystem I’m increasingly unhappy being in, the results are useful enough as a general idea of raw CPU performance.

For most of these benchmarks, I’m going to put up the ARM SBC benchmarks, and then a separate chart comparing with the house desktop - because while it’s a lot faster than most of the SBCs, it gives a sense of scale for just how well the Rock5 does on 10 watts.

In what’s going to start being a familiar pattern, the Pi4 comes in slowest, followed fairly closely by the PineBook Pro and N2+, with the Rock5 just running off with the scores. The N2+ isn’t a half bad little system (one has been my desktop in my office for a while), and the Rock5 turns in 1.75x the single threaded performance, and 2.17x the multithreaded performance. Eight cores vs six isn’t hurting, but there’s a lot more system throughput to be had.

Tossing the AMD box in the mix, on 5% the power, the Rock5 delivers 39% the single threaded performance and 29% the multithreaded performance. Not half bad!
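To put those percentages in work-per-watt terms, here is a small worked calculation. It only uses the rounded figures quoted above (5% of the power, 39% and 29% of the performance); the function name is made up for illustration, and the result is no more precise than the rough wall-power numbers that feed it.

```python
def perf_per_watt_ratio(perf_fraction: float, power_fraction: float) -> float:
    """How many times more work per watt system A does than system B,
    given A's performance and A's power draw as fractions of B's."""
    return perf_fraction / power_fraction


# Figures quoted in the post: Rock5 relative to the 5700X desktop.
POWER_FRACTION = 0.05          # Rock5 uses about 5% of the desktop's power
single = perf_per_watt_ratio(0.39, POWER_FRACTION)
multi = perf_per_watt_ratio(0.29, POWER_FRACTION)

print(f"single threaded: {single:.1f}x the work per watt")
print(f"multithreaded:   {multi:.1f}x the work per watt")
```

On those numbers the Rock5 does somewhere around six to eight times the work per watt of the desktop, depending on the test.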

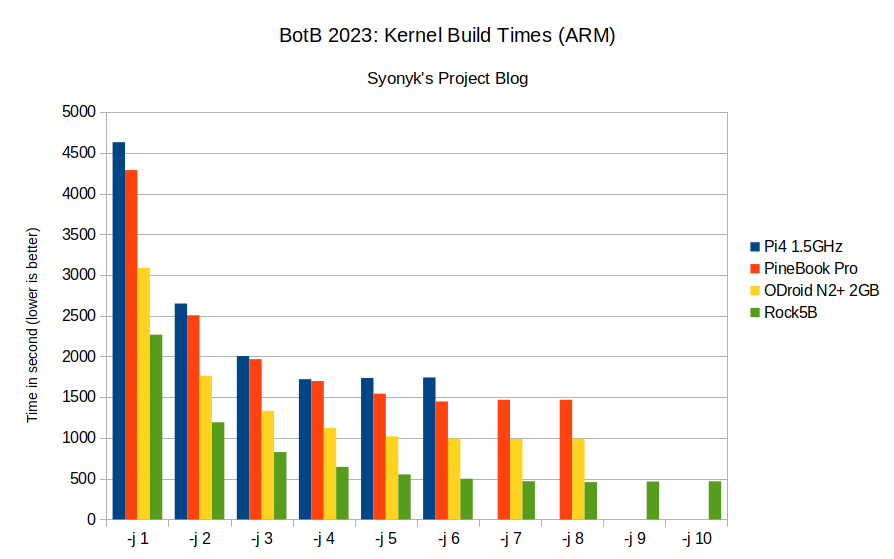

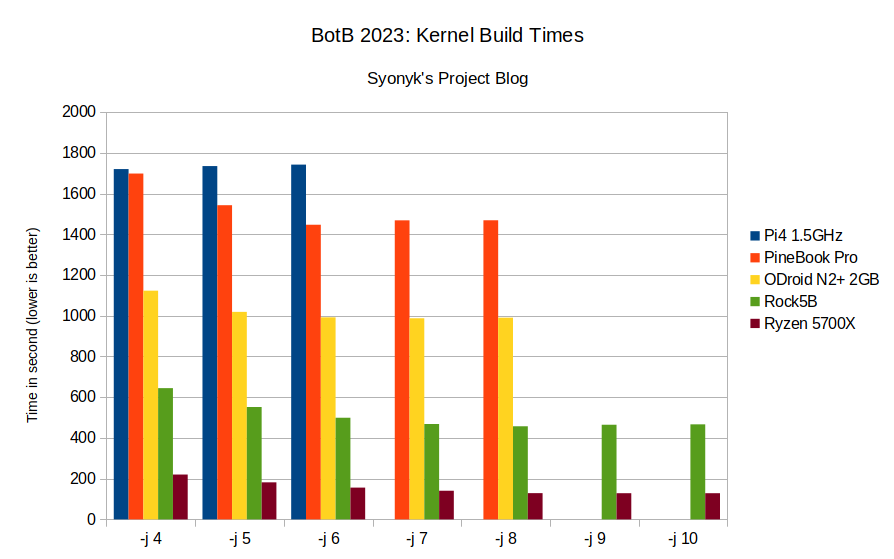

Kernel Build Benchmark

In the past, I’ve used some kernel build benchmarks to compare systems, but this year, I’m formalizing the system. I’m building, for all systems, the bcm2835_defconfig as a 64-bit kernel. Everything is running 64-bit native OSes, so for the few x86 machines in the test, they’re cross compiling, with the ARM systems running their native compilers. But, further, I’m testing the build at various widths - the “-j” value on make. I run it to “CPUs + 2” - so up to 8 threads on a 6 core system. The low counts stress peak single threaded performance, and the higher parallel options stress total system compute throughput (which, often enough, ends up RAM bandwidth limited). And we can compare the results!
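A sweep like this is easy to script. The following is a minimal sketch of the idea, not the script used for these charts: the function names are hypothetical, the build and clean commands are passed in rather than assumed, and the only thing it adds is the `-j` value. It assumes a clean step between runs so each width starts from the same state.

```python
import subprocess
import time
from typing import Callable, Dict, List, Sequence


def job_widths(cpu_count: int) -> List[int]:
    """-j values from 1 up to CPUs + 2, as described above."""
    return list(range(1, cpu_count + 3))


def sweep(
    build_cmd: Sequence[str],
    clean_cmd: Sequence[str],
    cpu_count: int,
    run: Callable[[List[str]], object] = subprocess.check_call,
) -> Dict[int, float]:
    """Time one full build per -j width and return {width: seconds}.

    `run` is injectable so the sweep logic can be exercised without
    actually invoking a compiler.
    """
    timings: Dict[int, float] = {}
    for jobs in job_widths(cpu_count):
        run(list(clean_cmd))                      # start every run from scratch
        start = time.perf_counter()
        run(list(build_cmd) + [f"-j{jobs}"])      # the timed build
        timings[jobs] = time.perf_counter() - start
    return timings
```

Run from a configured kernel tree, something like `sweep(["make", "ARCH=arm64", "Image"], ["make", "clean"], os.cpu_count())` would produce one timing per width; those particular make arguments are an assumption for illustration, and an x86 host would also need a cross compiler configured.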

Yes, the Rock5 turns in half the single threaded kernel build time of the Pi4, and just keeps rocking along from there. Builds were in my office, which is properly cold this time of year, so there was no thermal throttling, though I did have a heatsink laying on top of the Rock5 - it made a very minor difference. I’ll dive into these results more in another post, but peak performance came, in almost all cases, with “-j” equal to the CPU core count. There are some subtle differences plus or minus a core depending on the system, but it’s only a few percent. The bulk of the performance is clearly from the performance cores on all the systems - the efficiency cores add a bit, but not nearly as much as the performance cores.

Adding the x86 box in (and focusing on the more parallel builds to avoid the slow single threaded performance from drowning out the signal), the Rock5 takes about 4x longer than the x86 box - which is using about 17x the power. Huh.
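Since energy is power multiplied by time, those two ratios combine into an energy-per-build comparison. This is a back-of-the-envelope sketch using only the rounded "4x longer" and "17x the power" figures above; the function name is hypothetical.

```python
def relative_energy(time_ratio: float, power_ratio: float) -> float:
    """Energy used by system A relative to system B for the same job,
    where time_ratio = t_A / t_B and power_ratio = P_A / P_B."""
    return time_ratio * power_ratio


# Rock5 vs the x86 box: about 4x the build time at about 1/17 the power.
rock5_vs_x86 = relative_energy(time_ratio=4.0, power_ratio=1.0 / 17.0)

print(f"Rock5 energy per build: {rock5_vs_x86:.0%} of the x86 box")
print(f"x86 energy per build:   {1.0 / rock5_vs_x86:.2f}x the Rock5")
```

So even though the build takes four times as long, the Rock5 finishes it on roughly a quarter of the energy.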

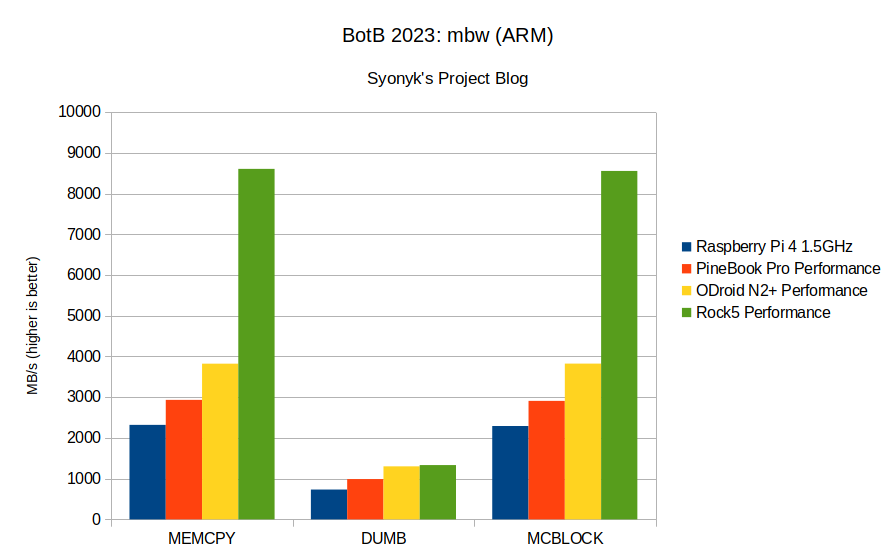

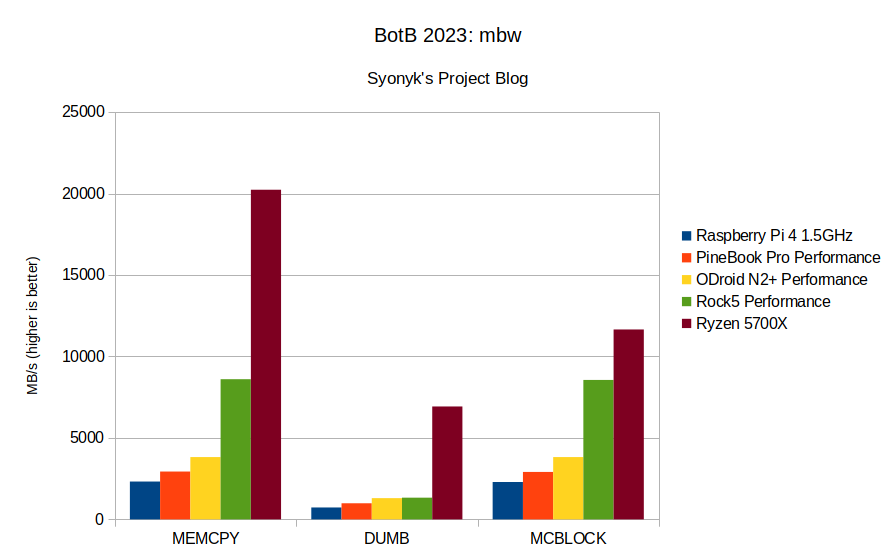

MBW

Memory Bandwidth. A friend of mine who knows what he’s talking about has settled on memory bandwidth as the key to modern system performance, and I generally agree with him. All the execution guts in the world don’t matter if you can’t feed them, and modern workloads benefit from a lot of cache. Which, of course, single board computers lack. But mbw does some “bulk copies” and returns reasonable results for the sort of memory performance you can expect out of main memory. These results are in bytes copied - so a read and a write is counted as “1 byte.” On the machines that have performance and efficiency cores (so everything but the Pi4 and 5700X), these are the results from the performance cores. The efficiency cores have lower bandwidth (typically), but I’ll be covering those in some posts going into more detail on the individual systems and how I have them set up.
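To make the “bytes copied” convention concrete, here is a rough illustration of a bulk-copy bandwidth measurement. It is not mbw and won’t reproduce its numbers; the function name, buffer size, and repeat count are all arbitrary choices for the sketch. It copies one large buffer into another and counts each byte once, even though the hardware had to both read and write it.

```python
import time


def copy_bandwidth_mib_s(size_mib: int = 64, repeats: int = 5) -> float:
    """Best-of-N bulk copy rate in MiB/s, counting each byte copied once."""
    size = size_mib * 1024 * 1024
    # Fill the source with a non-zero pattern so its pages are really backed
    # by memory, and pre-allocate the destination outside the timed region.
    src = bytearray(b"\xa5") * size
    dst = bytearray(size)

    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        dst[:] = src                      # one large memory-to-memory copy
        best = min(best, time.perf_counter() - start)
    return size_mib / best


if __name__ == "__main__":
    print(f"{copy_bandwidth_mib_s():.0f} MiB/s copied")
```

The buffer needs to be much larger than the last level cache for this to say anything about main memory. Pinning the process to the performance cores (for example with `taskset`) matters on the big.LITTLE boards.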

These posts used to be more interesting, with small incremental changes between new boards - 20% here, maybe a 30-40% improvement somewhere. Not doubling the next best system (though I’ve no idea why the “dumb” copy performance isn’t improved - I should dig into that more). If memory bandwidth is king, it’s no question why the Rock5 seems to perform well!

Comparing with the AMD box, again, the Rock5 delivers a useful fraction of the full desktop machine’s memory bandwidth on a tiny fraction of the power. Though, clearly, something on the Zen cores is handling the “dumb” copy better - which isn’t surprising. An awful lot of the x86 frontend is dedicated to various things that all sum up as “make stupid code fast.”

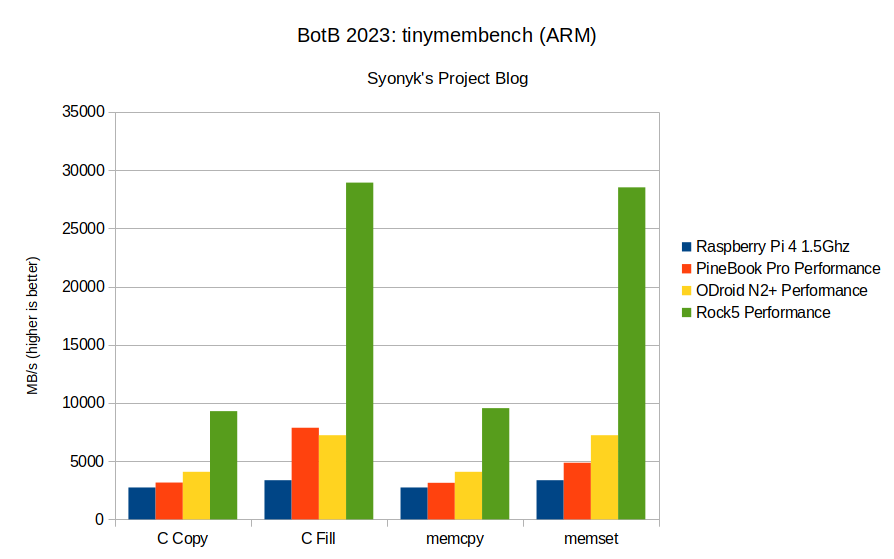

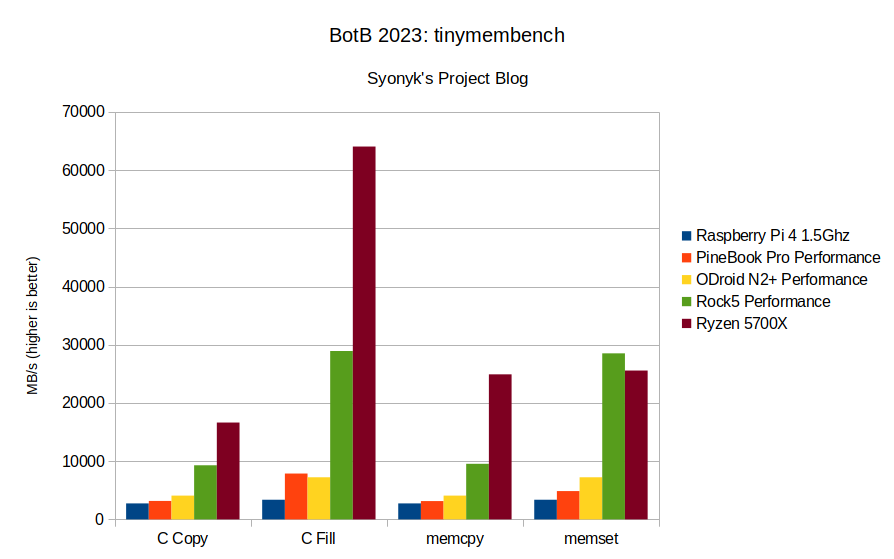

TinyMemBench

But, diving deeper into how to move memory around, I’m also making use of some results from TinyMemBench. This is a far more comprehensive memory test suite, aimed at really extracting the maximum performance from a memory system. I’m using a few of the results from it as a representative sample of bandwidth across the board. It tests vector copies as well, but there were no particularly surprising results there (the regular CPU instructions seem to be able to max out the bandwidth just as well as the vector instructions). However, this also includes some “fill” tests - not copying data around, but simply setting memory to a particular value. Again, higher is better.

I’m not sure why I bother, you know the shape of the results before you even look at the chart…

Adding the desktop in changes nothing. Yes, the desktop box performs better… except in memset. Which, I’ve no idea what’s going on there, because there’s clearly some fill bandwidth to use, if it could be extracted. Libc issues, I suppose.

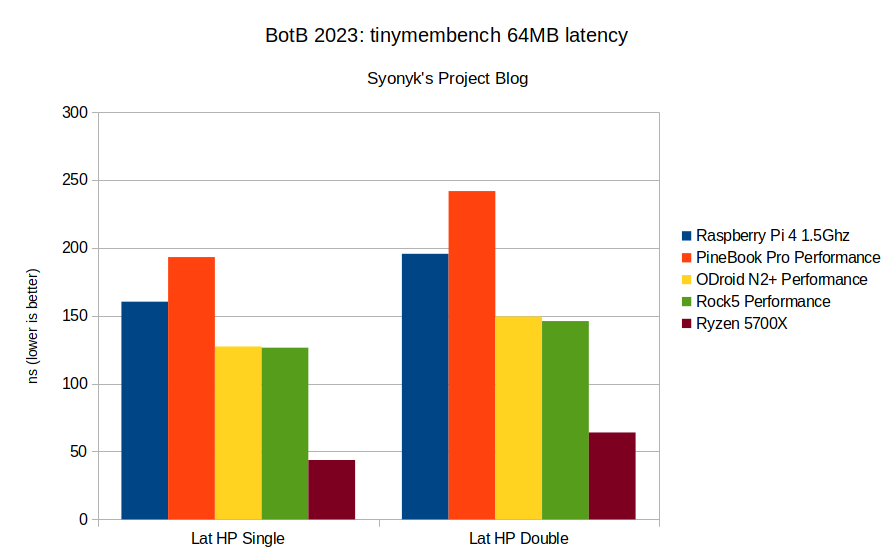

One of the other interesting tests that tinymembench performs is a latency test - and in this case, it’s “additional latency beyond L1 cache latency” for various size buffers. It shows off the cache system in some detail. The 5700X only has 32MB of L3 cache, so it should be out to main RAM, but clearly the latency is a lot better than on the ARM SBCs. Interestingly, the N2+ and Rock5 have about the same latency, despite the N2+ running DDR4 at 1320MHz and the Rock5 running it up at 2112MHz. The bandwidth difference is evident, but the latency difference is almost zero.

Browser Benchmarks

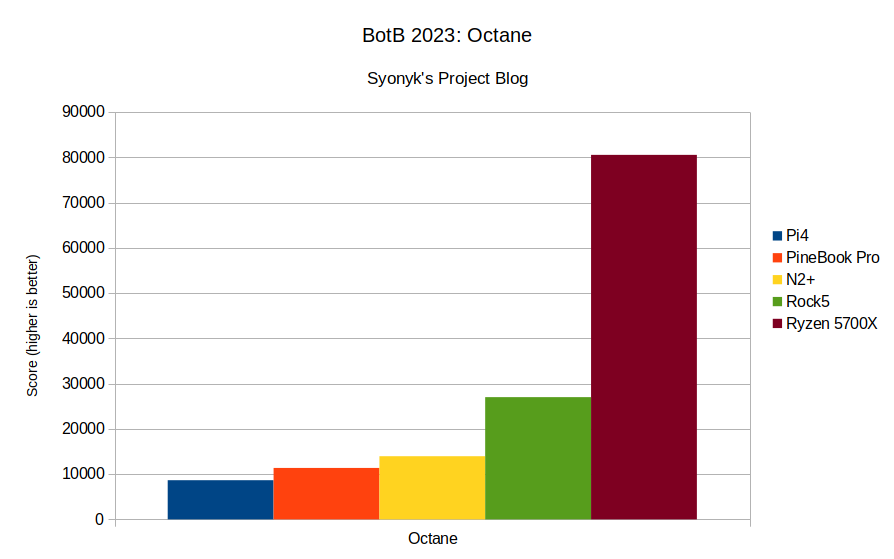

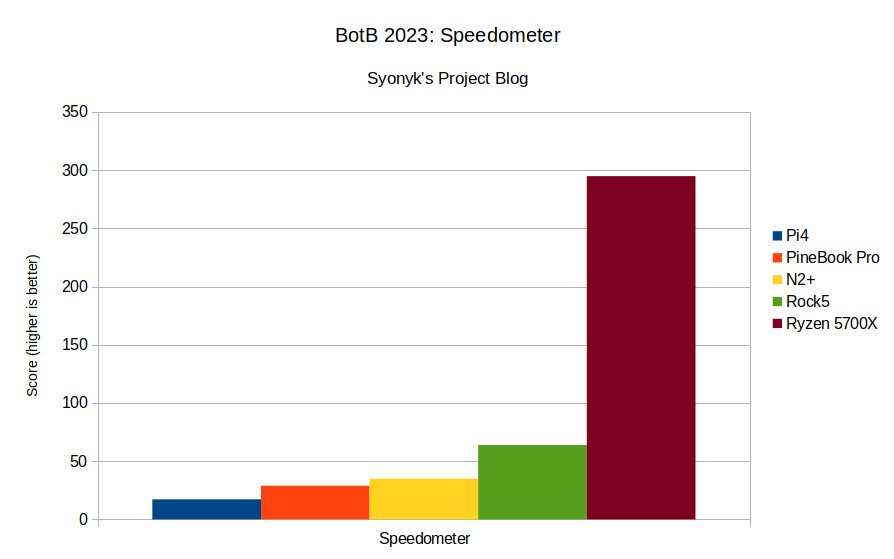

In the past, I’ve used a lot of Javascript benchmarks, and I’m moving away from that - because it mostly tests the JIT engine, and for reasons, I’m also moving away from JIT for Javascript. But I still think they’re useful as a reflection of how a system is likely to feel in heavy web use - which, unfortunately, remains a key part of modern computer use (for now). This year, I’m focusing on Octane and Speedometer. Octane is a Javascript compute benchmark, but Speedometer is a “full web rendering stack” benchmark - so it shows, more accurately, how the systems feel in performance. All this testing is done with Chromium 108.

There are no surprises here. The Rock5 crushes the rest of the ARM SBCs, and turns in some properly good efficiency results compared to the AMD desktop.

Speedometer reveals the downsides of not having good GPU acceleration - you can’t argue with the fact that the desktop has a more responsive browser, by a good margin. But despite that, I don’t actually notice a difference in practical use except on the really complex sorts of websites I don’t generally frequent. I will say that the New Reddit Abomination will make most of the ARM SBCs choke if you have Javascript enabled.

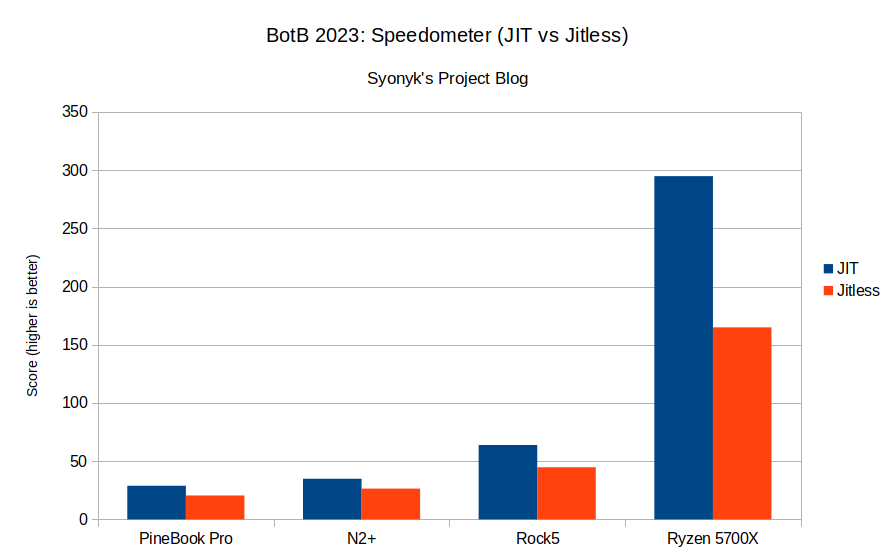

There will be more on this in the future, but it’s also very interesting to see what sort of “real world impact” disabling the Javascript JIT engine has on performance - and outside the pure JS compute benchmarks, less than you’d assume. To test the “jitless” performance, I add the flag, --js-flags="--jitless" to the command line for Chromium. It’s really, really not that big a difference!
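For anyone scripting the comparison, the flag can be added when launching the browser programmatically. This is a small sketch: the binary name `chromium` and the helper name are assumptions (distributions name the binary differently), and the only thing taken from the post is the `--js-flags="--jitless"` flag itself. When arguments are passed as a list, the shell quotes are not needed.

```python
from typing import List, Optional


def chromium_command(
    binary: str = "chromium",      # assumed name; some distros use chromium-browser
    jitless: bool = False,
    url: Optional[str] = None,
) -> List[str]:
    """Build an argv list for launching Chromium with or without the JS JIT."""
    cmd = [binary]
    if jitless:
        # Equivalent to --js-flags="--jitless" typed at a shell prompt.
        cmd.append("--js-flags=--jitless")
    if url is not None:
        cmd.append(url)
    return cmd


print(chromium_command(jitless=True))
```

The resulting list can be handed to `subprocess.Popen` to start one browser instance per configuration before running each benchmark.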

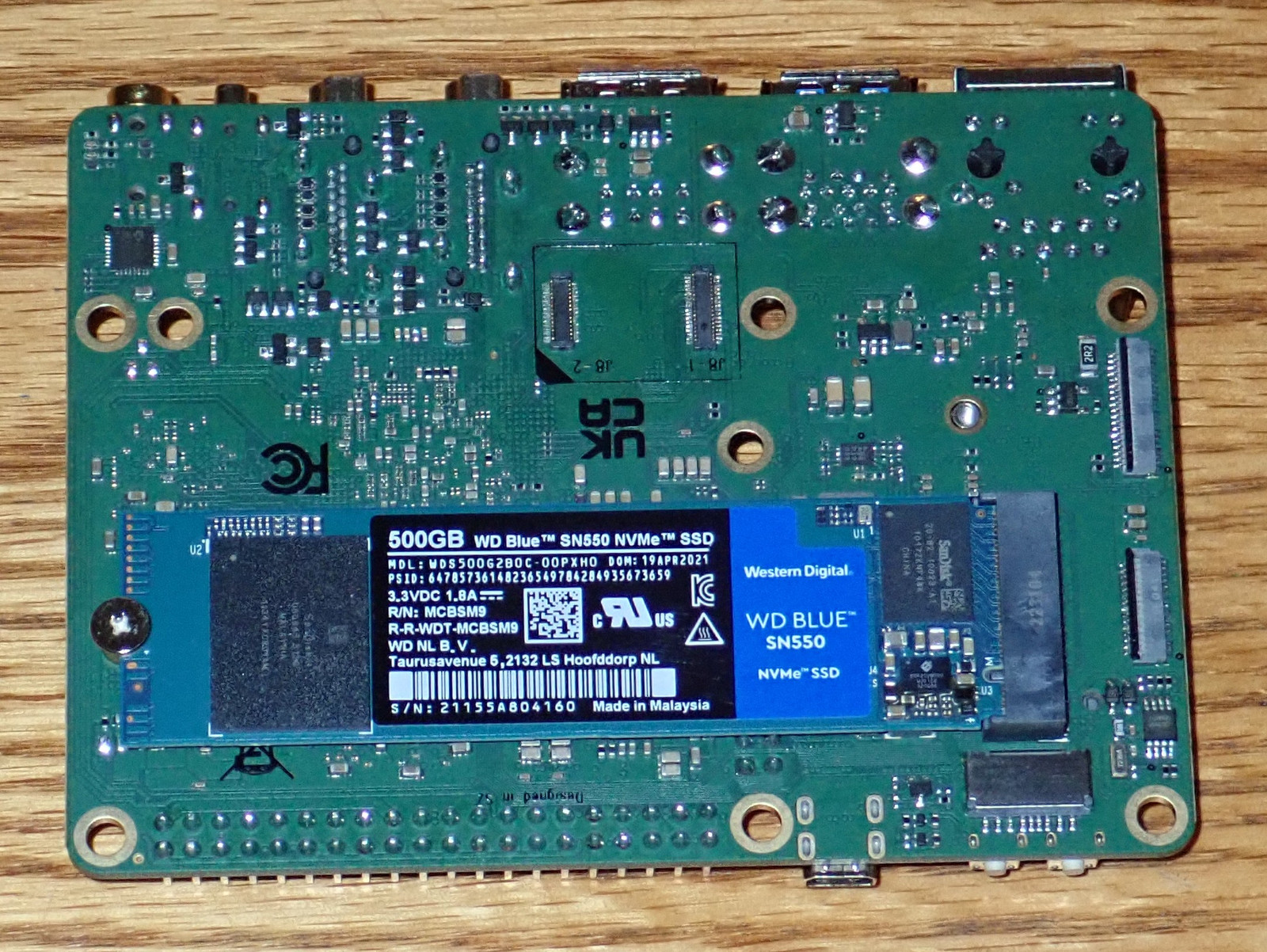

Disk Performance: NVMe Rules!

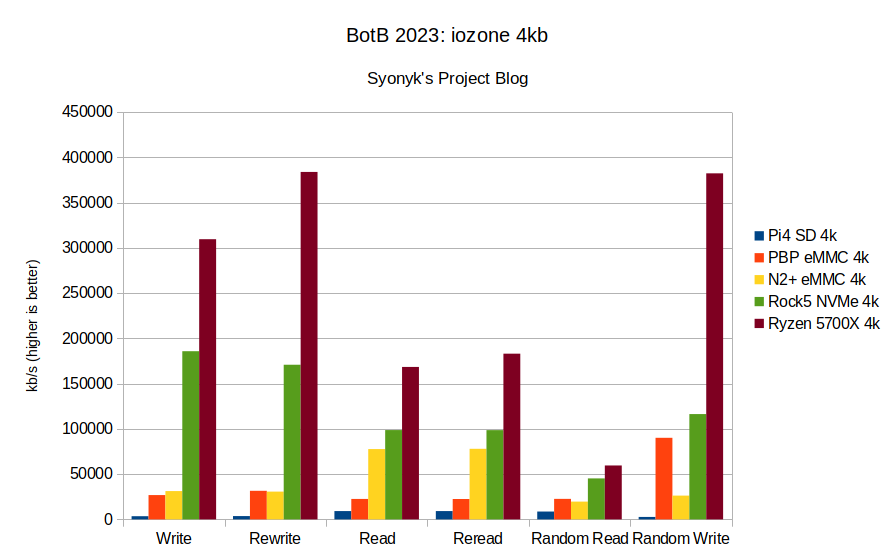

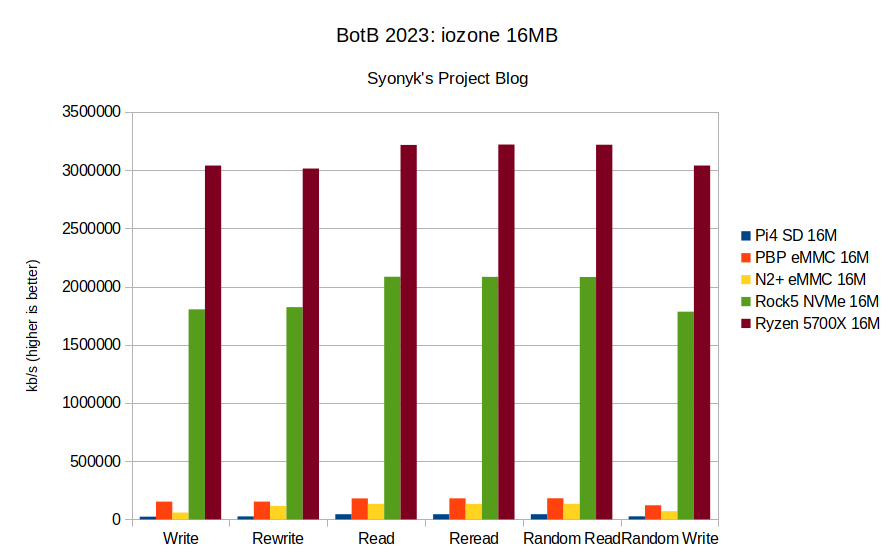

A lot of the previous benchmarks have focused on the difference between SD card performance (sucks) and eMMC performance (tolerable). Some people have gotten NVMe drives working on a Pi4, but even then they’re slow. The Rock5 has a real, honest NVMe interface - an x4, PCIe 3.0 interface. Performance should, as such, be epic. Even with a “slow, bargain” SSD like a Western Digital Blue. Is it?

Single threaded 4k block IO is hard for any device, but I’ve found it to be a good reflection of “teh snappy” in use - how fast a system can load new binaries into RAM and start executing them. NVMe drives benefit from large accesses and a lot of parallel stuff going on - and, as it turns out, the ARM SBC eMMC modules really do hold their own here for read performance! I expected a far larger gap between the eMMC modules and the NVMe drives, but especially the ODroid module holds up quite well. The house desktop’s higher end drive makes a big difference, but… look down there in random read - it’s not that large a difference in random 4k reads! The top of this chart is 450 MB/s.

But then we get to the bigger block reads, and here… no comparison. NVMe crushes all, as expected. This chart goes to 3500 MB/s - yes, 3.5 GB/s. And the Rock5 is north of 2GB/s on reads. No comparison.
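The difference between the two access patterns is easy to see in code. Below is a minimal single threaded sketch, assuming Linux and a large existing test file; it is not the tool behind these charts, and the function name and parameters are made up. It reads fixed-size blocks either in order or at random block-aligned offsets and reports MB/s. Because it goes through the page cache, a real measurement would need a file much larger than RAM or a dropped cache first.

```python
import os
import random
import time


def read_throughput_mb_s(path: str, block_size: int, count: int,
                         sequential: bool) -> float:
    """Read `count` blocks of `block_size` bytes and return MB/s achieved."""
    blocks = os.path.getsize(path) // block_size
    if blocks == 0:
        raise ValueError("file is smaller than one block")
    if sequential:
        offsets = [i * block_size for i in range(min(count, blocks))]
    else:
        offsets = [random.randrange(blocks) * block_size for _ in range(count)]

    fd = os.open(path, os.O_RDONLY)
    try:
        total = 0
        start = time.perf_counter()
        for offset in offsets:
            total += len(os.pread(fd, block_size, offset))  # one read per block
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
    return total / elapsed / 1e6
```

Calling it with `block_size=4096, sequential=False` approximates the random 4k case, while something like `block_size=1024 * 1024, sequential=True` approximates the large block reads where NVMe pulls far ahead.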

Final Thoughts

Results here are clear enough in terms of raw CPU performance - the Rock5 is an absolutely vast step forward from the range of ARM SBCs we’ve seen in the past, showing off the might of the RK3588 SoC. The problem, of course, is that the software around that SoC is very, very beta. There’s a board support kernel, but there’s nothing resembling mainline support yet, the GPU drivers are exceedingly poor, and you’re living on the very bleeding edge with it.

But… does it matter? If you don’t need GPU accelerated performance for a desktop and are OK with a framebuffer for now, with the promises of a GPU accelerated desktop in the future, the Rock5’s performance is just nuts.

The N2+’s performance remains quite solid, and the kernel support for things like the GPU is better than it used to be, but under heavy load, it’ll end up in weird states where you can’t get new buffers for the GPU allocated, so it breaks in weird ways.

The PineBook Pro, with the fairly mature RK3399, manages to “just work.” It’s slower than the N2+ in raw performance, but in terms of daily usability, that’s just a nice little chip, and as long as you don’t run it too badly out of RAM, it does everything I want, at least. With long battery life in the deal.

And the Pi4? Well… I hate to say “sell it,” but if you’ve got one, now is the time to sell it for an absurd amount of money and get yourself something else to play with.

I’m going to be going into more detail on these boards, how I have them set up, and some more performance details in the posts to come - so there’s that to look forward to as well! Happy New Year!

As another note, I’m pretty certain I’ve fixed email subscriptions - so if you want to sign up to get notifications of new posts, try signing up in the form at the bottom of the post!
