I didn’t have any particular posts planned for this week, but as is often the case, the news offered a useful topic: Apple’s upcoming ARM transition.

For years now, people have been discussing the possibility of Apple moving to ARM processors for their desktops and laptops. Their mobile devices have been ARM from the beginning, and with Apple’s modern phone processors giving Intel’s x86 offerings solid competition, it’s been an obvious question - but only an interesting one until recently, when the rumors solidified. Now it sounds like this is a thing that’s going to happen.

I know my way around some deep processor weeds, so this has been interesting to me. There’s a ton of uninformed garbage on the internet, as usual, so I’ll offer my attempt to clarify things!

And as of Monday, I’ll probably be proven mostly wrong…

x86? ARM? Power? 68k? Huh?

If (when?) Apple makes this change, it’s going to be the third major ISA (Instruction Set Architecture) transition in their history (68k -> PPC, PPC -> Intel, Intel -> ARM) - making them pretty much the only consumer hardware company to regularly change their processor architecture, and certainly the largest to pull it off.

The instruction set is, at nearly the lowest level, the “language” that your processor speaks. It defines the opcodes that execute on the very guts of the processor, and there have been quite a few of them throughout the history of computing. Adding two numbers, loading a value from memory, deciding which instruction to execute next, talking to external hardware devices - all of this is defined by the ISA.
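To make "the language your processor speaks" a bit more concrete, here's a minimal sketch of an interpreter for a made-up instruction set. The opcodes (`load`, `add`, `store`, `jnz`, `halt`) and the three registers are invented for illustration and don't correspond to x86, ARM, or any real ISA - the point is just that an ISA is the agreed list of operations and what each one does to registers, memory, and the program counter.

```python
# A toy machine with a made-up instruction set (not a real ISA).
def run(program, memory):
    regs = {"r0": 0, "r1": 0, "r2": 0}
    pc = 0  # program counter: index of the next instruction to execute
    while pc < len(program):
        op, *args = program[pc]
        pc += 1
        if op == "load":       # load dst, addr: read memory into a register
            dst, addr = args
            regs[dst] = memory[addr]
        elif op == "add":      # add dst, a, b: dst = a + b
            dst, a, b = args
            regs[dst] = regs[a] + regs[b]
        elif op == "store":    # store src, addr: write a register to memory
            src, addr = args
            memory[addr] = regs[src]
        elif op == "jnz":      # jnz reg, target: jump if reg is non-zero
            reg, target = args
            if regs[reg] != 0:
                pc = target
        elif op == "halt":
            break
        else:
            raise ValueError(f"unknown opcode: {op}")
    return regs, memory


# Add the values at memory[0] and memory[1], store the sum in memory[2].
program = [
    ("load", "r0", 0),
    ("load", "r1", 1),
    ("add", "r2", "r0", "r1"),
    ("store", "r2", 2),
    ("halt",),
]
regs, memory = run(program, [2, 3, 0])
print(memory[2])
```

A binary compiled for one ISA is just a long list of that ISA's opcodes, which is why it means nothing to a processor that speaks a different one.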

In the modern world of end user computing, there are only two that matter, though: ARM and x86 (sorry, RISC-V, you’re just not there yet). Up until recently, the division was simple. x86 processors (Intel and AMD) ran desktops, laptops, and servers. ARM processors ran phones, tablets, printers, and just about everything else.

Except for a few loons (like me) who insisted against all reason on making little ARM small board computers to function as desktops, this was how things worked. But not anymore!

Intel and AMD: The Power of x86

For decades now, the king of the performance hill has been the x86 ISA - Intel and AMD’s realm (to within a rounding error - yes, I know there have been other implementations, I’ve owned many of them, and they don’t matter anymore). Pentium. Core. Athlon. Xeon. These are the powerhouses. They run office applications. They run games. They run the giant datacenters that run the internet. If it’s fast and powerful, it’s been x86. Intel is the king, though AMD has made a proper nuisance of themselves on a regular basis (and, in fact, the 64-bit extensions to x86 you’re probably running were developed by AMD and licensed by Intel).

Desktops, laptops, servers. All powered by the mighty x86. From power sipping Atom processors in netbooks to massive Xeons and Opterons in data centers, x86 had you covered. It’s been the default for so long in anything powerful that most people don’t even think about it.

But the x86 ISA is over 40 years old (introduced in 1978), and it’s accumulated a lot of cruft and baggage over the years. x86 processors are maddeningly complex, with all sorts of weird corners and nasty gotchas - and that’s just gotten worse with time. MMX. SSE. VMX. TSX. SGX. TXT. SMM. You either have no idea what those are, or you’re shuddering a bit internally.

All of it adds up. Go read over the Intel SDM for fun, if you’re bored. It’s enjoyable, I promise - but it’s also pretty darn complicated.

ARM: Small, Power Efficient, and Cheap

Historically, ARM has been the lower power, far cheaper alternative to x86. The ARM company designs and licenses the CPU cores that most vendors use (the A series in phones and laptops - and if you know what M or R series cores are, you don’t need this blog post). They’re a set of compromises - fairly small in terms of die area (so “cheap to make”) and power efficient. They’re not focused on blazing fast performance. But they’re efficient, usually good enough, and as a result they’ve ended up in just about everything that’s not a desktop or laptop.

I’ve had a habit of messing around with these chips in things like the Raspberry Pi and Jetson Nano - they are good enough, for radically less money than a flagship Intel system.

In the last year or two, ARM chips started firing solid cannon blasts across the bow of the Intel Xeon chips in the datacenter. If you don’t care about raw single threaded performance, but care an awful lot about total throughput and power efficiency, ARM server chips are properly impressive. For less money than a Xeon, you can have more compute on fewer watts. Not bad!

They’ve made their way into laptops and desktops, but they’ve not been a threat to the flagship performance of Intel.

Except… there’s Apple. Who, on occasion, is totally insane.

Well… or not.

Apple’s ARM: Big, Power Efficient, and Fast

Most companies just license ARM’s cores and attach their desired peripherals to them.

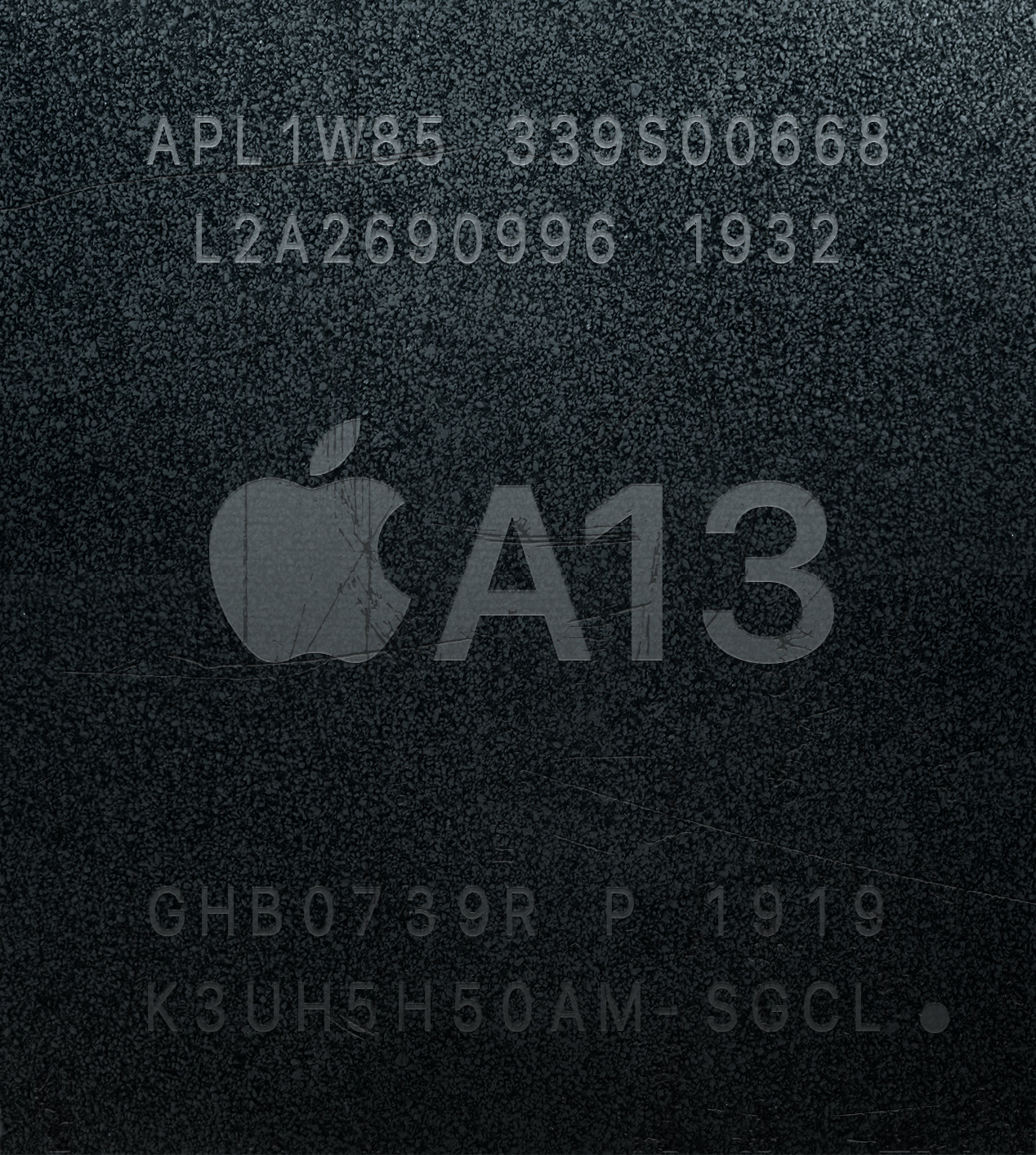

But a few companies have the proper licenses and engineering staff to build their own, totally custom ARM cores. Apple is one of these companies. Starting with the A6 processor in the iPhone 5, they were using their own custom processor implementations. They were ARM processors, sure, but they weren’t ARM’s processor implementations. The first few of these were fairly impressive at the time, but still were clearly phone chips. And then Apple got crazy.

If you own an iPhone 11, it’s (probably) faster than anything else in your house, in terms of single threaded tasks - on a mere few watts!

Apple has quietly been iterating on their custom ARM cores, and has created something properly impressive - with barely anyone noticing in the meantime! Yeah, the review scores are impressive, yeah, modern Android devices are stomped by the iPhone 8 on anything that’s not massively parallel, but they’re phones. They can’t threaten desktops and laptops, can they?

They can - and they are.

A laptop has a far larger thermal and power envelope than a phone. The iPhone 11’s battery is 3.1Ah @ 3.7V - 11.47Wh. The new 16” MacBook Pro has a 100Wh battery - roughly 8.7x larger. Some of that goes to the screen, but there’s a good bit of power to throw at compute. And if you plug a computer in, well, power’s not a problem!
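If you want to check the battery math yourself, it's just amp-hours times volts. A quick sketch, using the capacity and voltage figures quoted above (the function name is mine):

```python
def watt_hours(amp_hours, volts):
    """Energy stored in a battery: capacity (Ah) times nominal voltage (V)."""
    return amp_hours * volts


phone_wh = watt_hours(3.1, 3.7)   # iPhone 11 figures quoted in the text
laptop_wh = 100.0                 # 16-inch MacBook Pro, as advertised
ratio = laptop_wh / phone_wh

print(f"phone: {phone_wh:.2f} Wh, laptop is {ratio:.1f}x larger")
```

That works out to a bit under nine times the stored energy, before you even consider the larger heatsink and fans.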

This is interesting, of course - but then there’s how Intel has found themselves stuck.

Intel: Stuck on 14nm with a Broken uArch

If you’ve kept up with Intel over the last few years, none of this will be a surprise. If you haven’t, and have just noticed they regularly release new generations of processors, pay attention now.

Intel has been stuck on their 14nm process for about 4 years. Their microarchitecture is fundamentally broken in terms of security. And, worse, they can’t reason about their chips anymore.

Yes, they’ve been iterating on 14nm, and releasing 14nm+, 14nm++, 14nmXP, 14nmBBQ, and they’re improvements - but everyone else has leapfrogged them. Intel used to have an unquestioned process technology lead over the rest of the tech industry, and it showed. Intel chips (post Netburst) used less power and gave more performance than anything else out there. They were solid chips, they performed well, and they were worth the money for most cases. But they’ve lost their lead.

Not only have they lost their lead, their previous chips have been getting slower with age. You’ve probably heard of Meltdown and Spectre, but those are only the camel’s nose. There has been a laundry list of other issues. Most of them have fixes - some microcode updates, some software recompilation, some kernel workarounds… but these all slow the processor down. Your Haswell processor, today, runs code more slowly than it did when it came out. Whoops.

Finally, Intel can’t reason about their chips anymore. They seem unable to fully understand the chips, and I don’t know why - but I do know that quite regularly, some microarchitectural vulnerability comes out that rips open their “secure” SGX enclaves - again. In the worst of them, L1 Terminal Fault/Foreshadow, the fix is simple - flush the L1 cache on enclave transition. It’s an expensive enough world switch that the hit isn’t too bad, but Intel didn’t know it was a problem until they were told.

So, why would you want to be chained to Intel anymore? There just aren’t any good reasons. There’s AMD, who has caught up, but I just don’t see Apple moving from one third party vendor to another. They’re going to pull it in-house - which should be very, very interesting.

Apple’s ARM Advantages

Given all this, why would Apple be considering ARM chips for their Macs?

Because it gives them better control over the stack, which should translate, rather nicely, to a better user experience (at least for most users).

They’ll be able to have the same performance on less power - and, in the bargain, be able to integrate more of their “stuff” on the same chip. There won’t be a need for a separate T2 security processor when they can just build it into their main processor die. They won’t be stuck dealing with “Whatever GPU abomination Intel has dumped in this generation” - they can design it for their needs, with their hardware acceleration, for the stuff they care about (yes, I know Intel isn’t as bad as they used to be, but I sincerely doubt Apple is happy with their integrated GPUs).

Fewer chips means a smaller board - which generally means less power consumption or better performance.

One thing I’ve not seen mentioned other places is that Apple is literally sitting on the limit of how much battery they can have in a laptop. They claim their laptops have a 100Wh battery - with a disclaimer.

What, you might ask, is wrong with a 100Wh battery, such that there’s a disclaimer saying that it’s actually 0.2Wh less?

There are a lot of shipping (and carry on luggage) regulations that use 100Wh as a limit somewhere - and they’re usually phrased, “less than 100Wh” for the lowest category. Apple is being super explicitly clear that their laptops are less than 100Wh - even if they advertise them as being equal to 100Wh. USPS shipping, UPS, TSA… all of these have something to say about 100Wh.

Apple literally can’t put more battery in their laptops. So the only way to increase runtime is to reduce the power they use. Their ARM chips almost certainly are using less power than Intel chips for the same work.

Or, a smaller, lighter laptop for the same runtime. Either way, it’s something that sets them apart from the rest of the industry, who will be stuck on Intel for a while longer. Microsoft’s ARM ambitions have gone roughly nowhere, and Linux-only hardware isn’t a big seller outside Chromebooks.

They also gain a lot from being able to put the same “stuff” in their iOS devices (which have a huge developer community, even if a lot of them are pushing freemium addictionware) as in their laptops. Think machine learning accelerators, neural network engines, speech recognition hardware. The gap between what an iPhone can do and what a MacBook Pro can do (for the things where the phone is better) ought to narrow significantly. We might even see a return of laptops with built in cell modems!

Apple tries to control the whole stack, and this removes a huge component that they don’t control. And it should be good!

Now, to address a few things that have been the subject of much discussion lately…

x86_64 on ARM Emulation

One of the sticking points about Apple switching to ARM is that they’re based on x86 right now - so, what happens to your existing software? Switching to ARM means that x86 software won’t work without some translation. It turns out, Apple has a massive amount of experience doing exactly this sort of thing. They made 68k binaries run fine on PowerPC, and they made PowerPC binaries run acceptably on x86. I fully expect they’ll do the same sort of thing to make x86 binaries run on ARM.

Emulating is hard, especially if you want to do it fast. There’s a lot of work in the JIT (Just In Time) space for things like JavaScript, but if Apple does ship emulation (which I think they will), I’d expect a JIT engine to be used only infrequently. The real performance would come from binary translation - treating the x86 binary as source code that gets turned into an ARM binary. This lets you figure out when some of x86’s weirder features (like the flags register being updated on every instruction) are needed, and when they can be ignored. One could also, with a bit of work, probably figure out when ARM’s rather relaxed memory model compared to x86 will cause problems and insert some memory fences. It’s not easy, but it’s certainly the type of thing Apple has done before.
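The flags trick is easier to see in code. Here's a minimal sketch of the kind of backward liveness pass a binary translator could run over a straight-line block of x86 to decide which flag updates actually need to be computed. This is my own toy model, not anything Apple has described: it treats the flags register as a single unit (real x86 instructions often update only some flags), and the `Insn` type and `flags_needed` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Insn:
    text: str
    writes_flags: bool = False
    reads_flags: bool = False


def flags_needed(block, live_out=True):
    """For each instruction, report whether its flag update must be kept.

    Walks the block backwards. `live_out=True` is the conservative choice:
    assume whatever runs after this block might read the flags.
    """
    needed = [False] * len(block)
    live = live_out
    for i in range(len(block) - 1, -1, -1):
        insn = block[i]
        if insn.writes_flags:
            needed[i] = live   # only matters if someone later reads it
            live = False       # this write hides all earlier flag values
        if insn.reads_flags:
            live = True        # a reader needs the most recent earlier write
    return needed


block = [
    Insn("add rax, rbx", writes_flags=True),
    Insn("sub rcx, 1", writes_flags=True),
    Insn("cmp rax, rcx", writes_flags=True),
    Insn("jne loop", reads_flags=True),
]

for insn, keep in zip(block, flags_needed(block)):
    print(f"{insn.text:<14} flags {'kept' if keep else 'dropped'}")
```

In this block only the `cmp` has to produce real flags, so the translated `add` and `sub` can become plain ARM arithmetic with no flag bookkeeping at all. A JIT working one instruction at a time has a much harder time proving that.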

Plus, ARM has more registers. 64-bit ARMv8 has 31 general purpose registers, and x86_64 only has 16 main architectural registers (plus some MSRs, segments… it’s a mess as you go deeper). That tends to make emulation a bit more friendly.

I have no doubt that the emulation will “go native” at the kernel syscall interfaces - they won’t be running an x86 kernel in emulation to deal with x86 binaries. This is well established in things like QEMU’s user-mode emulation.

What would be very interesting, though, and I think Apple might pull this off, would be “going native” at the library calls. If you’re recompiling a binary, you know when the code is going to call into an Apple library function (assuming the binary isn’t being deliberately obscure - which most aren’t). If they got a bit creative, emulated software could jump from the emulated application code to native library code. This would gain a lot of performance back, because all the things Apple’s libraries do (which is a lot) would be at native performance!
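As a rough sketch of what "going native at the library calls" could look like: when translated code calls a symbol, a dispatcher checks whether a native build of that function exists and jumps there, and only falls back to the translated guest code otherwise. Everything here is hypothetical (the `Dispatcher` class, the symbol names, the tables), and a real implementation would also have to convert calling conventions and data layouts, which this toy ignores.

```python
import math


class Dispatcher:
    """Routes calls from translated code to native or emulated targets."""

    def __init__(self, native_table, guest_table):
        self.native_table = native_table  # symbol -> native implementation
        self.guest_table = guest_table    # symbol -> translated guest code
        self.log = []

    def call(self, symbol, *args):
        if symbol in self.native_table:
            # System library: run the native build at full speed.
            self.log.append((symbol, "native"))
            return self.native_table[symbol](*args)
        # Application code: run the translated version.
        self.log.append((symbol, "emulated"))
        return self.guest_table[symbol](*args)


def guest_hypotenuse(dispatcher, a, b):
    # Stand-in for translated application code that calls a library routine.
    return dispatcher.call("sqrt", a * a + b * b)


dispatcher = Dispatcher(native_table={"sqrt": math.sqrt}, guest_table={})
dispatcher.guest_table["hypotenuse"] = lambda a, b: guest_hypotenuse(dispatcher, a, b)

print(dispatcher.call("hypotenuse", 3.0, 4.0))
print(dispatcher.log)
```

The application's own logic pays the translation cost, but everything it hands off to the system libraries doesn't.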

Remember, Apple has their own developer stack and can tweak the compilers as they want. It wouldn’t surprise me if they’ve been emitting emulation-friendly x86 for the past few years.

Of course, we’ll find out Monday.

Virtualization: Goodbye Native Windows Performance

The next question, for at least a subset of users, is “But what about my virtual machines?”

Well, what about them? ARM supports virtualization, and if all you want to do is run a Linux VM, just run an ARM Linux VM!

But if you need x86 applications (say, Windows 10)? This is where it will be interesting to watch. I fully expect solutions out there - VirtualPC, years ago, ran x86 Windows on PowerPC hardware. I just don’t know what sort of performance you can get out of full system emulation on ARM. Normally, things have been the other way - emulate a slow ARM system on a fast x86 machine. The performance isn’t great, but it’s good enough. Going the other way, though? Nobody has really tried, because we’ve never had really fast ARM chips to mess with. Of course, if Apple’s chips are 20-30% faster than the comparable Intel chips, you can spend some time on emulation and still come out even or ahead.
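The break-even point is simple division. A small sketch, where the 20-30% native advantage comes from the paragraph above and the emulation slowdown factors are hypothetical values I picked to show the shape of the trade-off, not measurements of anything:

```python
def relative_speed(native_advantage, emulation_slowdown):
    """Speed of emulated x86 code on ARM, relative to the Intel chip (1.0 = even).

    native_advantage:   how much faster the ARM chip runs native code (1.3 = 30%)
    emulation_slowdown: how many times slower code runs under emulation
    """
    return native_advantage / emulation_slowdown


for advantage in (1.2, 1.3):
    for slowdown in (1.1, 1.3, 2.0):  # hypothetical overheads
        speed = relative_speed(advantage, slowdown)
        print(f"advantage {advantage:.1f}x, slowdown {slowdown:.1f}x -> {speed:.2f}x")
```

In other words, you come out even when the emulation overhead equals the native speed advantage, and anything much past that lands you behind the Intel machine.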

If you’re hoping to run some weird x86 utility (Mikrotik Winbox comes to mind as the sort of thing I’d run), I’m sure there will be good enough solutions. Maybe this will light a fire under Microsoft to fix their ARM Windows and emulator.

But for x86 games? Probably not. Sorry.

The ARM64 Software Ecosystem: Yay!

Me, though? I’m really excited about something that almost nobody else cares about.

The aarch64 software ecosystem is going to get fixed, and fast!

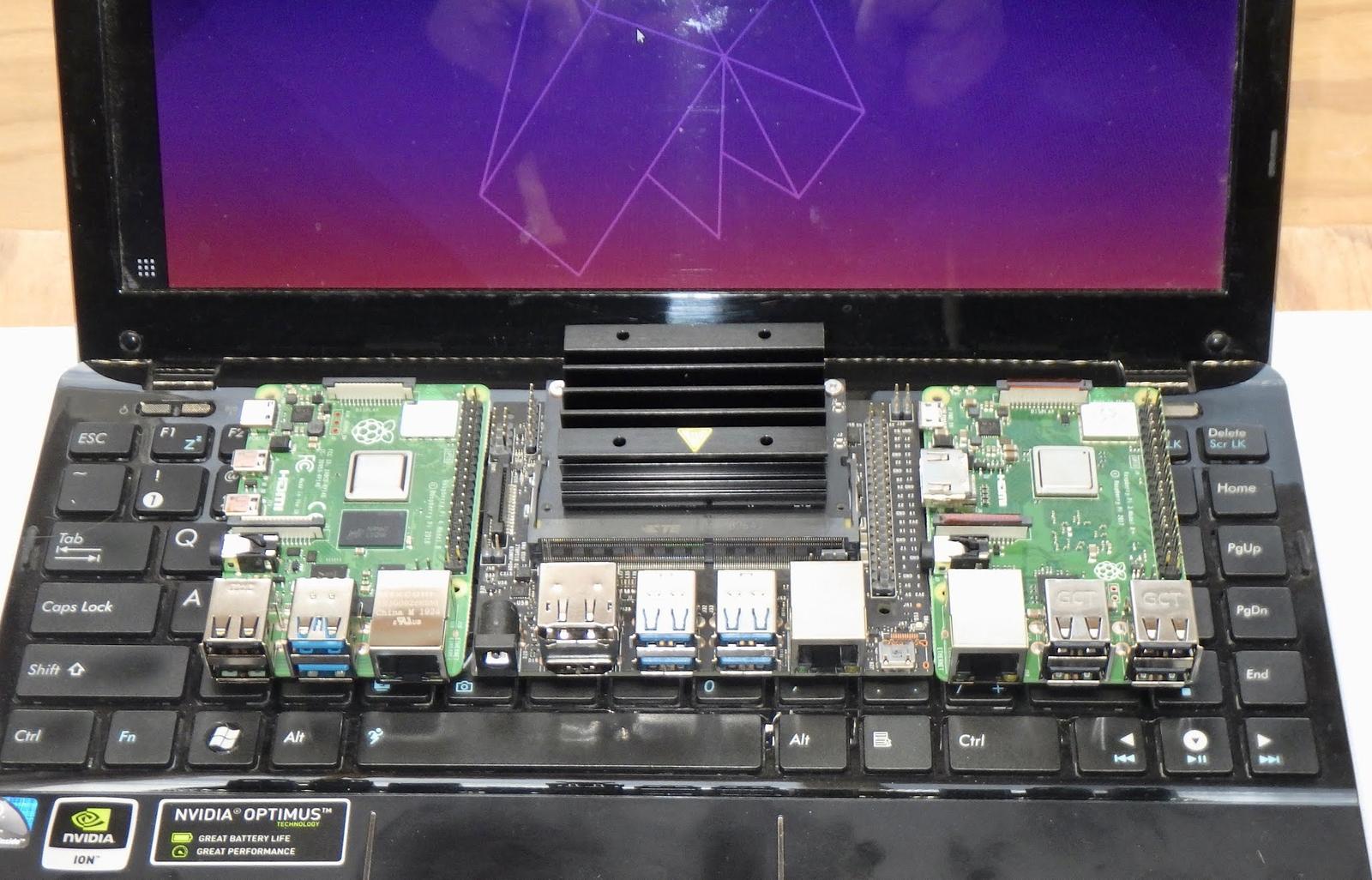

I’ve been playing with ARM desktops for a while now - Raspberry Pi 3, Raspberry Pi 3B+, Raspberry Pi 4, and the Jetson Nano. Plus a soon-to-be-reviewed PineBook Pro (which I’m typing this post on).

The Raspberry Pis have made the armhf/aarch32 ecosystem (32-bit ARM with a proper floating point unit) tolerable. That plus the ARM Chromebooks means that ARM on 32-bit is quite livable.

The 64-bit stuff, though? Stuff just randomly fails. Clearly, there is very little development and polishing on it, and you end up with things like Signal not building on 64-bit ARM, because some ancient dependency deep in the Node dependencies doesn’t know what aarch64 means (why it should matter in the first place… well, web developers gonna web developer).
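A lot of these failures come down to an architecture check that was written before anyone expected 64-bit ARM to show up. Here's a sketch of the defensive version, assuming a made-up alias table of my own; it is not taken from Signal, Node, or any particular dependency. One real wrinkle it handles: the same hardware reports itself as `aarch64` on Linux and `arm64` on Apple's platforms.

```python
import platform

# Hypothetical alias table: map the names different OSes report onto one label.
ARCH_ALIASES = {
    "x86_64": "x86_64",
    "amd64": "x86_64",
    "aarch64": "arm64",
    "arm64": "arm64",
    "armv7l": "arm",
    "armv6l": "arm",
}


def normalize_arch(machine):
    """Map a raw machine string to a canonical name, failing loudly if unknown."""
    key = machine.lower()
    if key not in ARCH_ALIASES:
        raise ValueError(f"unsupported architecture: {machine!r}")
    return ARCH_ALIASES[key]


print(normalize_arch("aarch64"))
print(normalize_arch("AMD64"))
# What this machine reports, without raising on an architecture we don't list:
print(ARCH_ALIASES.get(platform.machine().lower(), "unknown"))
```

The broken builds tend to be the ones with a hardcoded list that stops at `x86_64` and `arm`, with no error path for anything else.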

I expect all of this to be fixed - and fast! Which is great news for light ARM systems!

Intel’s Future

Where does this leave Intel?

It depends a bit on just how good Apple is, and how soon Intel can get back on the tracks. If they iterate quickly, get their sub-10nm processes working, and fix their microarchitecture properly (instead of using universities as their R&D/QA labs), we should be in for a good decade of back and forth. Apple’s team is excellent, Intel’s team… well, used to be excellent, at least, and they’ve certainly both got resources to throw at it. As we crash headlong into the brick wall at the end of Moore’s Law, getting creative (ideally in ways that don’t leak secrets) is going to be important.

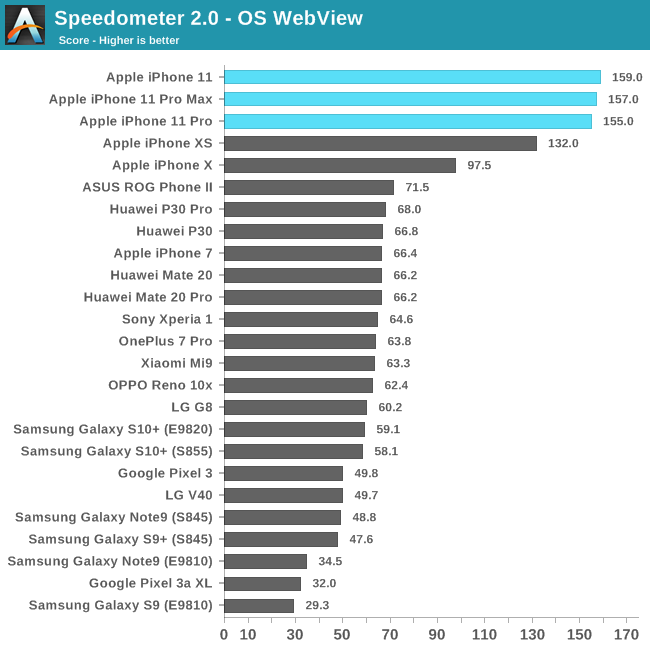

We could end up with something like the state of phones. Anandtech’s iPhone 11 review has benchmark charts like this entertaining test of web performance. Apple, Apple’s previous generation, Apple’s generation before that, a big gap, and then the rest of the pack. And, yes, they do mention that the iPhone 11 is a desktop grade chip in terms of SPEC benchmark performance.

Will it be this bad? Probably not. But it’s a possibility. It really depends on what Apple’s chip designers have waiting in the wings for when someone says, “Yes you can haz 35W TDP!”

But between Apple likely moving away from Intel, and data centers working out that the ARM options are cheaper to buy, cheaper to run, and faster in the bargain? Intel might have a very hard hill to climb - and they may not make it back up.

Coming up: More ARM!

If you’re enjoying the ARM posts, there’s more to come! My PineBook Pro arrived a few weeks ago, and I’ve been beating on it pretty hard as a daily driver. What makes it tick? How is it? How can we fix rough edges? All that, and more, in weeks to come!

Plus solar progress, if the weather ever agrees…
